Database Reference

In-Depth Information

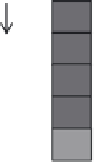

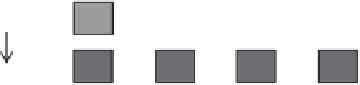

Figure 1.3. The parallel parts of a program can be run either concurrently or in par-

allel on a single machine, or in a distributed fashion across machines. Programmers

parallelize their sequential programs primarily to run them faster and/or achieve

higher throughput (e.g., number of data blocks read per hour). Specifically, in an

ideal world, what programmers expect is that by parallelizing a sequential program

into an

n

-way distributed program, an

n

-fold decrease in execution time is obtained.

Using distributed programs as opposed to sequential ones is crucial for multiple

domains, especially for science. For instance, simulating a single protein folding

can take years if performed sequentially, while it only takes days if executed in a

distributed manner [67]. Indeed, the pace of scientific discovery is contingent on how

fast some certain scientific problems can be solved. Furthermore, some programs

have real time constraints by which if computation is not performed fast enough, the

whole program might turn out to be useless. For example, predicting the direction of

hurricanes and tornados using weather modeling must be done in a timely manner

or the whole prediction will be unusable. In actuality, scientists and engineers have

relied on distributed programs for decades to solve important and complex scientific

problems such as quantum mechanics, physical simulations, weather forecasting, oil

and gas exploration, and molecular modeling, to mention a few. We expect this trend

to continue, at least for the foreseeable future.

Distributed programs have also found a broader audience outside science, such as

serving search engines, Web servers, and databases. For instance, much of the success

of Google can be traced back to the effectiveness of its algorithms such as PageRank

[42]. PageRank is a distributed program that is run within Google's search engine over

thousands of machines to rank web pages. Without parallelization, PageRank cannot

achieve its goals effectively. Parallelization allows also leveraging available resources

effectively. For example, running a Hadoop MapReduce [27] program over a single

Amazon EC2 instance will not be as effective as running it over a large-scale cluster

of EC2 instances. Of course, committing jobs earlier on the cloud leads to fewer dollar

costs, a key objective for cloud users. Lastly, distributed programs can further serve

greatly in alleviating subsystem bottlenecks. For instance, I/O devices such as disks and

S1

Spawn

S1

P1

P2

P3

P4

S2

P1

P2

P3

P3

Join

S2

(a)

(b)

FIGURE 1.3

(a) A sequential program with serial (

S

i

) and parallel (

P

i

) parts. (b) A parallel/

distributed program that corresponds to the sequential program in (a), whereby the parallel

parts can be either distributed across machines or run concurrently on a single machine.

Search WWH ::

Custom Search