τ Ξ

Ag

Y

Ξ

AG

X

No Generalizations

Trust

(AgY,

τ

)

AgX

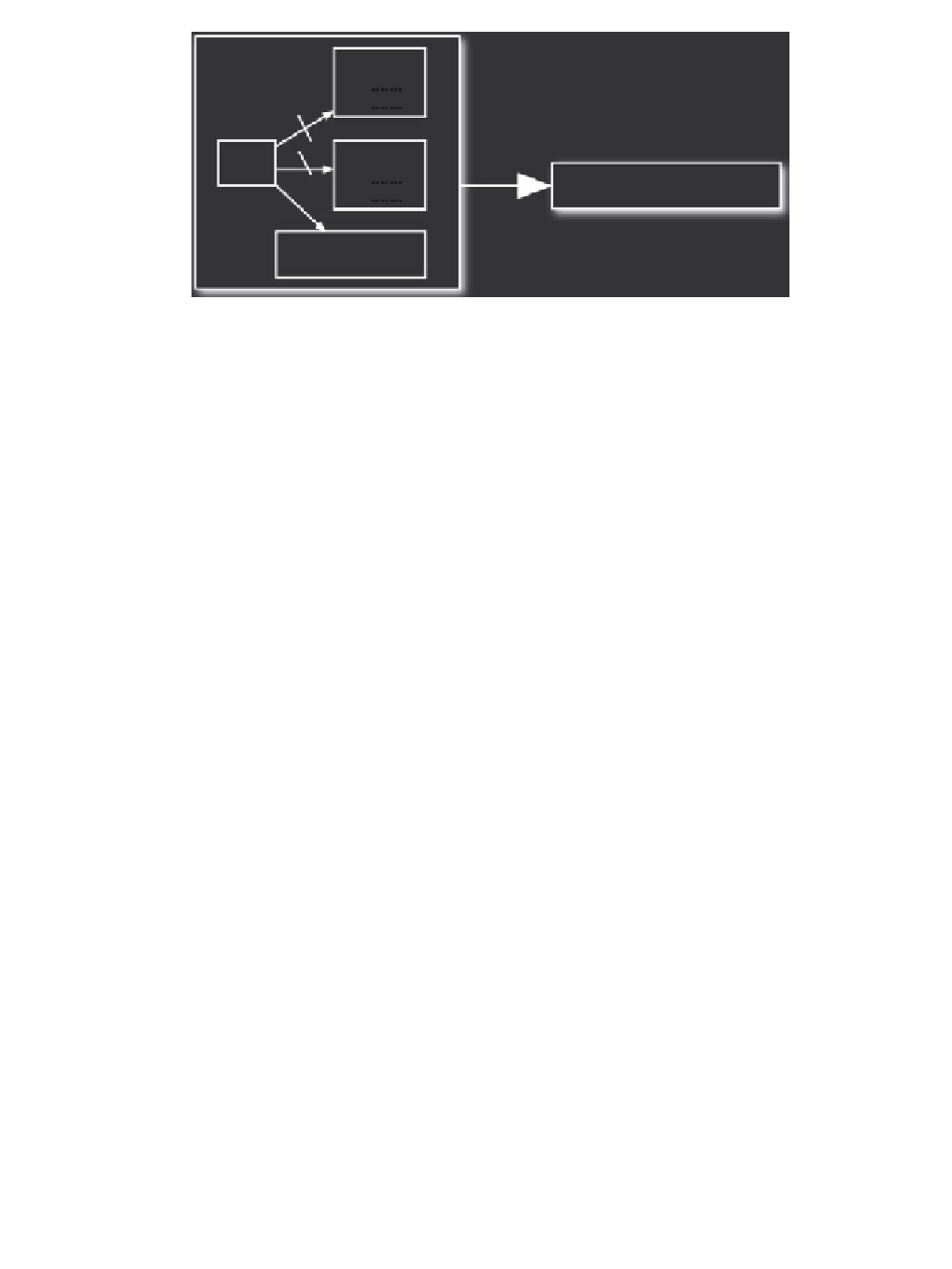

Figure 6.13

Generalization in case of

Ag

X

's ignorance about task's properties and trustee's features.

(Reproduced with kind permission of Springer Science+Business Media

C

2008)

of $Ag_Y$. So we can conclude (see Figure 6.13) that in case A there is no possible rational, grounded generalization to other tasks or agents: the set (a1, a2, a3) does not permit generalizations.

In fact, the only possibility for a rational generalization in this case is an indirect generalization by $Ag_X$. For example, someone else can suggest to $Ag_X$ that, on the basis of his first trustworthy attitude, he can trust another agent or another task because there is an analogy between them: in this case $Ag_X$, trusting this suggestion, acquires the belief for an indirect generalization.

The second case (case B) we consider is when $Ag_X$ does not know $Ag_Y$'s features, but he knows $\tau$'s properties, and he trusts $Ag_Y$ on $\tau$ (also in this case for several possible reasons different from inferential reasoning on the match between properties and features). In more formal terms:

b1) $Trust_{Ag_X}(Ag_Y, \tau)$

b2) $\neg Bel_{Ag_X}(f_{Ag_Y} \equiv \{f_1, \ldots, f_n\})$

b3) $Bel_{Ag_X}(\tau \equiv \{p_1, \ldots, p_k\} \cup \{p_1, \ldots, p_m\})$
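As an illustration only, the three conditions of case B can be held in a small belief record and checked mechanically. The sketch below is in Python; the class `TrustorBeliefs`, its field names and the sample values are assumptions made for this example and are not part of the formal model.

```python
from dataclasses import dataclass, field


@dataclass
class TrustorBeliefs:
    """Hypothetical record of what the trustor Ag_X believes."""
    trusted: set = field(default_factory=set)       # (agent, task) pairs Ag_X trusts
    features: dict = field(default_factory=dict)    # agent -> set of known features
    properties: dict = field(default_factory=dict)  # task -> set of known properties

    def knows_features(self, agent):
        return agent in self.features

    def knows_properties(self, task):
        return task in self.properties

    def satisfies_case_b(self, agent, task):
        """True when b1, b2 and b3 all hold for this agent and task."""
        return (
            (agent, task) in self.trusted        # b1: Ag_X trusts the agent on the task
            and not self.knows_features(agent)   # b2: the agent's features are unknown
            and self.knows_properties(task)      # b3: the task's properties are known
        )


# Sample data (invented): Ag_X trusts AgY on tau and knows tau's properties only.
beliefs = TrustorBeliefs(
    trusted={("AgY", "tau")},
    properties={"tau": {"p1", "p2", "p3"}},
)
```

With this sample data, `beliefs.satisfies_case_b("AgY", "tau")` holds because no features are recorded for `AgY` while the properties of `tau` are.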

Despite the ignorance about $Ag_Y$'s features (b2), $Ag_X$ can believe that $Ag_Y$ is trustworthy on a different (but in some way analogous) task $\tau'$ (generalization of the task), starting just from the previous cognitive elements (b1 and b3) and from the knowledge of $\tau'$'s properties. He can evaluate the overlap among the core properties of $\tau$ and $\tau'$, and decide if and when to trust $Ag_Y$ on a different task. It is not possible to generalize with respect to the agents because there is no way of evaluating any analogies with other agents.

So we can conclude (see Figure 6.14) that in case B task generalization is possible: the set (b1, b2, b3) permits task generalizations but does not permit agent generalizations.

Also in this case we can imagine an indirect agent generalization. If $Ag_X$ trusts a set of agents $AG1 \equiv \{Ag_Y, Ag_W, \ldots, Ag_Z\}$ on a set of different but similar tasks $T1 \equiv \{\tau, \ldots, \tau^n\}$ (he can evaluate this similarity given his knowledge of their properties), he can trust each of the agents included in $AG1$ on each of the tasks included in $T1$.
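The two steps described for case B, comparing the core properties of two tasks and extending trust across a set of agents and a set of similar tasks, can be sketched in Python. This is a minimal sketch under stated assumptions: the overlap ratio, the `threshold` value and all function names are hypothetical choices for the example, since the text does not say how the overlap is measured or when it is sufficient.

```python
from itertools import product


def core_overlap(core_tau, core_tau_prime):
    """Share of tau's core properties also found in tau' (assumed measure)."""
    core_tau = set(core_tau)
    if not core_tau:
        return 0.0
    return len(core_tau & set(core_tau_prime)) / len(core_tau)


def may_generalize_task(core_tau, core_tau_prime, threshold=0.75):
    """Task generalization: accept tau' when the overlap reaches the
    threshold. The threshold is an assumption, not given in the text."""
    return core_overlap(core_tau, core_tau_prime) >= threshold


def indirect_agent_generalization(agents, tasks):
    """Indirect agent generalization: every agent in AG1 paired with
    every task in T1."""
    return set(product(agents, tasks))


# Invented sample data: tau and tau' share three of tau's four core properties.
core_tau = {"p1", "p2", "p3", "p4"}
core_tau_prime = {"p1", "p2", "p3", "p7"}
pairs = indirect_agent_generalization(["AgY", "AgW"], ["tau", "tau_prime"])
```

In this sketch the agents' features are never consulted, which mirrors the point that case B supports task generalization directly, while agent generalization arises only indirectly from the sets $AG1$ and $T1$.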

The third case (case C) we consider is when $Ag_X$ does not know $\tau$'s properties, but he knows $Ag_Y$'s features, and he trusts $Ag_Y$ on $\tau$ (again for several possible reasons different from
