
terms threshold, English has three metrics with the best results: the one already mentioned, Least Median Tf-Idf, and Phi-Square.

As pointed out above, the Rvar and MI metrics alone were unable to discriminate the top 5, 10 or 20 best-ranked terms. This probably explains the need for the Least and Median operators proposed by [1,2]. Precision for the Rvar- and MI-derived metrics is shown in Table 3. Compared with Table 2, it clearly shows that the Tf-Idf and Phi-Square based metrics are much better than those based on Rvar and MI. The best Rvar and MI metrics reach values only slightly above 0.50, and most fall below 0.50, which makes their average precision rather poor.
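A minimal sketch of how a Prec(k) value can be computed, assuming that each Prec(k) figure is the fraction of the k best-ranked terms judged relevant, averaged over the evaluated documents. The function names, the example terms and the relevance judgements are hypothetical and are not taken from the paper.

```python
from typing import Iterable, Sequence, Set, Tuple


def precision_at_k(ranked_terms: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the k best-ranked terms that were judged relevant."""
    if k <= 0:
        raise ValueError("k must be positive")
    # Count how many of the first k terms appear in the relevant set.
    hits = sum(1 for term in ranked_terms[:k] if term in relevant)
    return hits / k


def mean_precision_at_k(runs: Iterable[Tuple[Sequence[str], Set[str]]], k: int) -> float:
    """Average precision_at_k over several documents (one ranking per document)."""
    scores = [precision_at_k(ranked, relevant, k) for ranked, relevant in runs]
    return sum(scores) / len(scores) if scores else 0.0


# Hypothetical ranking produced by some metric, with hypothetical human judgements.
ranked = ["parliament", "the", "fisheries", "quota", "of", "vessel"]
relevant = {"parliament", "fisheries", "quota", "vessel"}
print(precision_at_k(ranked, relevant, 5))  # 3 of the top 5 are relevant
```

With these inputs the top 5 terms contain three relevant ones, so Prec(5) is 0.6; a metric that pushes function words such as "the" and "of" down the ranking would raise it.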

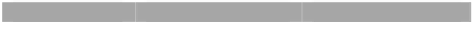

Table 2. Average precision values for the 5, 10 and 20 best terms using the best metrics, and average for each threshold

Czech

| Metric | Prec. (5) | Prec. (10) | Prec. (20) |
|---|---|---|---|
| Tf-Idf | 0.90 | 0.86 | 0.66 |
| L Tf-Idf | 0.75 | 0.70 | 0.61 |
| LM Tf-Idf | 0.70 | 0.65 | 0.59 |
| LB Tf-Idf | 0.80 | 0.68 | 0.65 |
| LBM Tf-Idf | 0.65 | 0.68 | 0.66 |
| ϕ² | 0.70 | 0.70 | 0.61 |
| L ϕ² | 0.70 | 0.60 | 0.58 |
| LM ϕ² | 0.70 | 0.60 | 0.58 |
| LB ϕ² | 0.55 | 0.63 | 0.55 |
| LBM ϕ² | 0.55 | 0.65 | 0.59 |
| Average | 0.72 | 0.68 | 0.61 |

English

| Metric | Prec. (5) | Prec. (10) | Prec. (20) |
|---|---|---|---|
| Tf-Idf | 0.84 | 0.74 | 0.67 |
| L Tf-Idf | 0.78 | 0.66 | 0.68 |
| LM Tf-Idf | 0.81 | 0.78 | 0.66 |
| LB Tf-Idf | 0.85 | 0.66 | 0.65 |
| LBM Tf-Idf | 0.82 | 0.69 | 0.62 |
| ϕ² | 0.84 | 0.78 | 0.68 |
| L ϕ² | 0.83 | 0.76 | 0.69 |
| LM ϕ² | 0.87 | 0.78 | 0.70 |
| LB ϕ² | 0.83 | 0.74 | 0.62 |
| LBM ϕ² | 0.80 | 0.74 | 0.65 |
| Average | 0.83 | 0.73 | 0.66 |

Portuguese

| Metric | Prec. (5) | Prec. (10) | Prec. (20) |
|---|---|---|---|
| Tf-Idf | 0.69 | 0.70 | 0.66 |
| L Tf-Idf | 0.64 | 0.66 | 0.65 |
| LM Tf-Idf | 0.68 | 0.63 | 0.64 |
| LB Tf-Idf | 0.86 | 0.71 | 0.65 |
| LBM Tf-Idf | 0.83 | 0.70 | 0.68 |
| ϕ² | 0.73 | 0.73 | 0.62 |
| L ϕ² | 0.68 | 0.64 | 0.59 |
| LM ϕ² | 0.61 | 0.64 | 0.59 |
| LB ϕ² | 0.60 | 0.65 | 0.65 |
| LBM ϕ² | 0.62 | 0.61 | 0.62 |
| Average | 0.70 | 0.67 | 0.63 |
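As a quick arithmetic check of the English block of Table 2, the sketch below recomputes the average for each threshold and lists the metrics tied for the best value. It assumes the ϕ² values pair with the ϕ² metric labels in the order in which both appear in the table; the dictionary and helper names are illustrative only. Values are stored in hundredths so that ties compare exactly.

```python
# English block of Table 2, in hundredths (84 means 0.84), one tuple per metric:
# (Prec. (5), Prec. (10), Prec. (20)).
ENGLISH = {
    "Tf-Idf": (84, 74, 67),
    "L Tf-Idf": (78, 66, 68),
    "LM Tf-Idf": (81, 78, 66),
    "LB Tf-Idf": (85, 66, 65),
    "LBM Tf-Idf": (82, 69, 62),
    "ϕ²": (84, 78, 68),
    "L ϕ²": (83, 76, 69),
    "LM ϕ²": (87, 78, 70),
    "LB ϕ²": (83, 74, 62),
    "LBM ϕ²": (80, 74, 65),
}
THRESHOLDS = (5, 10, 20)


def column_average(table, col):
    """Mean of one precision column, rounded to whole hundredths."""
    values = [row[col] for row in table.values()]
    return round(sum(values) / len(values))


def best_metrics(table, col):
    """Highest value in a column and every metric that reaches it (ties kept)."""
    top = max(row[col] for row in table.values())
    return top, [name for name, row in table.items() if row[col] == top]


for col, k in enumerate(THRESHOLDS):
    avg = column_average(ENGLISH, col)
    top, names = best_metrics(ENGLISH, col)
    print(f"Prec. ({k}): average {avg / 100:.2f}, best {top / 100:.2f} by {', '.join(names)}")
```

Run as is, it reports averages of 0.83, 0.73 and 0.66, matching the Average row, and a three-way tie at 0.78 for Prec. (10) among LM Tf-Idf, ϕ² and LM ϕ², which is the group of three best English metrics discussed in the text.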