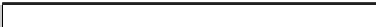

Table 5.1. Dataset description

| Dataset | Original size | Dims | Classes | Aggregation size | Evaluation |
|---|---|---|---|---|---|
| Wave | 300 | 21 | 3 | 30 | leave-1-out |
| Pima | 768 | 8 | 2 | 77 | leave-1-out |
| Shuttle | 58000 | 9 | 7 | 594 | 10-fold |
| Segment | 2310 | 19 | 7 | 319 | 10-fold |
| Ringnoise | 50000 | 4 | 2 | 500 | 10-fold |
| Bank8FM | 4499 | 8 | continuous | 450 | 10-fold |

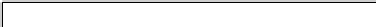

Table 5.2. SVM classification and regression results

| Dataset | Accuracy (%) | Mean squared error |
|---|---|---|
| Wave | 80.00 | 0.46 |
| Pima | 79.22 | 0.21 |
| Shuttle | 94.78 | 1.10 |
| Segment | 91.22 | 1.70 |
| Ringnoise | 84.20 | 0.19 |

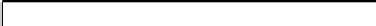

Table 5.3. One-class SVM results

| Dataset | Outliers |
|---|---|
| Shuttle | 9 |
| Bank8FM | 6 |

classification, regression and novelty detection tasks. No experimental results on interval data mining have been reported for other algorithms. We therefore report only the results obtained by our approach, since it is difficult to compare them with other methods.

5.3.4 Other Kernel-Based Methods

Many multivariate statistics algorithms based on generalized eigenproblems can also be kernelized [21], e.g. Kernel Fisher's Discriminant Analysis (KFDA), Kernel Principal Component Analysis (KPCA) or Kernel Partial Least Squares (KPLS). These kernel-based methods can also use the RBF kernel function described in Section 2 to deal with interval data. We use KPCA and KFDA to visualize datasets in the embedding space, where the user can intuitively see the separating boundary between the classes by relying on human pattern recognition capabilities.
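As a rough illustration of how KPCA can produce such an embedding from interval data, the following is a minimal NumPy sketch, not the authors' implementation. The function names `interval_rbf_kernel` and `kernel_pca` and the parameter `gamma` are hypothetical. The interval distance used here (Euclidean distance between the stacked lower and upper bounds) is an assumption made only for illustration; the RBF kernel of Section 2 may rely on a different distance.

```python
import numpy as np


def interval_rbf_kernel(lo, hi, gamma=0.5):
    """RBF kernel matrix for n interval-valued points in d dimensions.

    lo, hi: arrays of shape (n, d) holding lower and upper bounds.
    ASSUMPTION: two intervals are compared through the Euclidean distance
    between their stacked (lower, upper) bound vectors. This stands in for
    the interval distance of Section 2, which is not reproduced here.
    """
    z = np.hstack([lo, hi])                          # shape (n, 2d)
    sq = np.sum(z ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * (z @ z.T)
    d2 = np.maximum(d2, 0.0)                         # guard against round-off
    return np.exp(-gamma * d2)


def kernel_pca(K, n_components=2):
    """Project the training points onto the leading kernel principal components.

    K: symmetric kernel matrix of shape (n, n).
    Returns an (n, n_components) array of embedding coordinates.
    """
    n = K.shape[0]
    one = np.full((n, n), 1.0 / n)
    # Center the data implicitly in the feature space.
    Kc = K - one @ K - K @ one + one @ Kc_helper(K, one)
    # Symmetric eigenproblem; eigh returns eigenvalues in ascending order.
    eigvals, eigvecs = np.linalg.eigh(Kc)
    order = np.argsort(eigvals)[::-1][:n_components]
    lam = np.maximum(eigvals[order], 0.0)
    alpha = eigvecs[:, order]
    # For training points the projection equals sqrt(lambda) * eigenvector.
    return alpha * np.sqrt(lam)


def Kc_helper(K, one):
    """Return K @ one, the last factor of the double-centering term."""
    return K @ one
```

The two columns returned by `kernel_pca(interval_rbf_kernel(lo, hi), 2)` can then be drawn as a scatter plot colored by class label, which is the kind of view in which a user would look for a separating boundary.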

The eigenvectors of the data covariance matrix indicate the directions of maximum variance; linear PCA therefore projects the data onto the principal components obtained by solving an eigenproblem. By using a kernel function instead of the linear inner