Information Technology Reference

In-Depth Information

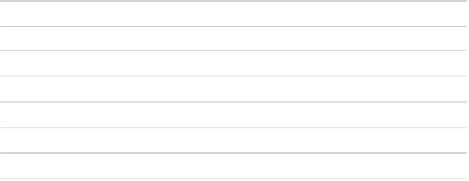

Generalization error of noisy data

80

70

60

50

AdaBoost M1

BrownBoost

AdaBoostHyb

40

30

20

10

0

Databases

Fig. 3.3.

Rate of error on Noisy data

However this increase remains always inferior with that of the traditional

approach except for the databases such as Credit-A, Hepatitis and Hypotyroid.

So, we studied these databases and we observed that all these databases have

missing values. In fact, Credited, Hepatitis and Hypothyroid have respectively

5%, 6% and 5,4% of missing values. It seems that our improvement loses its

effect with accumulation of two types of noise: missing values and artificial noise,

although the algorithm AdaBoost

Hyb

improves the performance of AdaBoost

against the noise. Using test-student, we find a significant p-value 0.0352 and a

average gain of 4.6 compared to AdaBoost. Considering Brownboost, we remark

that it gives better error rates that AdaboostM1 on all the noisy data sets.

However, It gives better error rates than our proposed method, only with 6 data

sets. Our proposed method gives better error rates with the other 9 data sets. We

haven't a significant p-value but a average gain of 4.4 compared to AdaBoost.

This encourages us to study in details the behavior of our proposed method on

noisy data.

3.4.4

Comparison of Convergence Speed

In this part, we are interested in the number of iterations that allow the algo-

rithms to converge, i.e. where the error rate is stabilized. Table 2 and graphic 4

shows us that the hybrid approach allows AdaBoost to converge more quickly.

Indeed, the error rate of AdaBoost M1 is not stabilized even after 100 iterations,

whereas Adaboost Hyb converges after 20 iterations or even before.