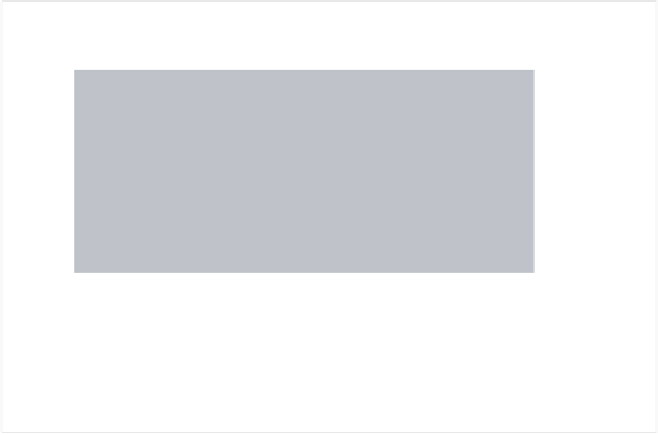

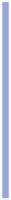

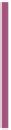

Fig. 3.2. Rate of recall: comparison of recall (vertical axis from 0 to 100) across the databases for AdaBoost M1, BrownBoost, and AdaBoostHyb.

important error rates. Based on these results, we apply Student's t-test and find a significant p-value of 0.0010. We obtain a significant average gain of 4.8 compared to AdaBoost.
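The significance check described above can be sketched as follows. This is a minimal illustration that assumes a paired Student's t-test over per-database scores (the text does not say whether the test is paired). The function name `paired_t_statistic` and all numbers are hypothetical placeholders, not the values behind the reported gain or p-value.

```python
import math
import statistics


def paired_t_statistic(baseline, candidate):
    """Return (mean gain, t statistic, degrees of freedom) for paired scores."""
    if len(baseline) != len(candidate) or len(baseline) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    # Per-database gain of the candidate algorithm over the baseline.
    diffs = [c - b for b, c in zip(baseline, candidate)]
    mean_gain = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 divisor)
    if sd == 0:
        raise ValueError("all gains are identical; t is undefined")
    t_stat = mean_gain / (sd / math.sqrt(len(diffs)))
    return mean_gain, t_stat, len(diffs) - 1


# Hypothetical recall values in percent, one entry per database (assumption).
baseline_recall = [70.0, 65.0, 80.0, 55.0, 90.0]
candidate_recall = [75.0, 69.0, 86.0, 58.0, 94.0]

mean_gain, t_stat, dof = paired_t_statistic(baseline_recall, candidate_recall)
print(f"average gain = {mean_gain:.1f}, t = {t_stat:.2f}, dof = {dof}")
```

The p-value is then read from the Student t distribution with `dof` degrees of freedom, for instance from a statistical table or a statistics library.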

Considering BrownBoost, we observe that it improves the recall of AdaBoostM1 over all the data sets (except the TITANIC one). However, the recall rates given by our proposed algorithm are better than those of BrownBoost, except on the zoo dataset. In this case, we have a significant p-value of 0.0002 but a non-significant average gain (1.4) compared to AdaBoost, according to the results given by AdaBoostHyb.

We also note that our approach improves the recall on the Lymph database, where the error was higher. Note, however, that the new approach does not degrade the recall: it improves it even when it cannot improve the error rates.
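To see how recall can improve while the error rate stays the same, consider the following toy sketch. It assumes recall is measured on a single target class (the text does not specify the averaging used), and the labels and the helper names `error_rate` and `recall` are hypothetical, chosen only for illustration.

```python
def error_rate(y_true, y_pred):
    """Fraction of instances whose predicted class differs from the true class."""
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return wrong / len(y_true)


def recall(y_true, y_pred, target):
    """Fraction of instances of class `target` that are predicted as `target`."""
    relevant = [p for t, p in zip(y_true, y_pred) if t == target]
    if not relevant:
        raise ValueError("no instance of the target class")
    return sum(1 for p in relevant if p == target) / len(relevant)


# Hypothetical labels: class 1 is the rare class (assumption for illustration).
y_true = [1, 1, 0, 0, 0, 0, 0, 0]
pred_a = [0, 0, 0, 0, 0, 0, 0, 0]  # misses both rare instances
pred_b = [1, 1, 1, 1, 0, 0, 0, 0]  # finds both, at the cost of two false alarms

for name, pred in (("A", pred_a), ("B", pred_b)):
    print(name, error_rate(y_true, pred), recall(y_true, pred, target=1))
```

Both classifiers make two mistakes out of eight, so their error rates are equal, yet classifier B recovers every instance of the rare class while classifier A recovers none.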

3.4.3 Comparison with Noisy Data

In this part, we build on the study by Dietterich [6] by adding random noise to the data. Noise is added at a rate of 20% to each of these databases by randomly replacing the value of the predicted class with another possible value of this class.
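The noise procedure described above can be sketched as follows. This is an illustrative reading, not the authors' code: it assumes that exactly 20% of the instances are selected (rather than each instance being flipped independently with probability 0.2) and that the replacement class is drawn uniformly from the other classes. The function name `add_class_noise` and the `seed` parameter are hypothetical.

```python
import random


def add_class_noise(labels, classes, rate=0.20, seed=0):
    """Return a copy of `labels` in which a fraction `rate` of the entries
    is replaced by a different class drawn at random from `classes`."""
    if len(classes) < 2:
        raise ValueError("need at least two classes to change a label")
    rng = random.Random(seed)  # local generator, so results are reproducible
    noisy = list(labels)
    n_flip = round(rate * len(noisy))
    # Pick distinct positions so that exactly n_flip labels are changed.
    for i in rng.sample(range(len(noisy)), n_flip):
        alternatives = [c for c in classes if c != noisy[i]]
        noisy[i] = rng.choice(alternatives)
    return noisy


# Hypothetical class labels for a small three-class data set (assumption).
classes = ["a", "b", "c"]
clean = ["a", "b", "c"] * 10
noisy = add_class_noise(clean, classes)
changed = sum(1 for x, y in zip(clean, noisy) if x != y)
print(f"{changed} of {len(clean)} labels changed")
```

Because the replacement is always drawn from the other classes, every selected label actually changes, so the effective noise rate equals the requested one.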

Graphic 3 shows the behavior of the algorithms with noise. We notice that the hybrid approach is also sensitive to noise, since the generalization error rate increases for all the databases.