11) Re-run the model. You do not need to switch back to the main process to re-run the model. You may if you wish, but you can also stay in the sub-process view and run it from there. When you return from the Results perspective to the Design perspective, you will see whichever design window you were last in. When you re-run the model, you will see a new performance matrix, showing the model's predictive power using Gini as the underlying algorithm.

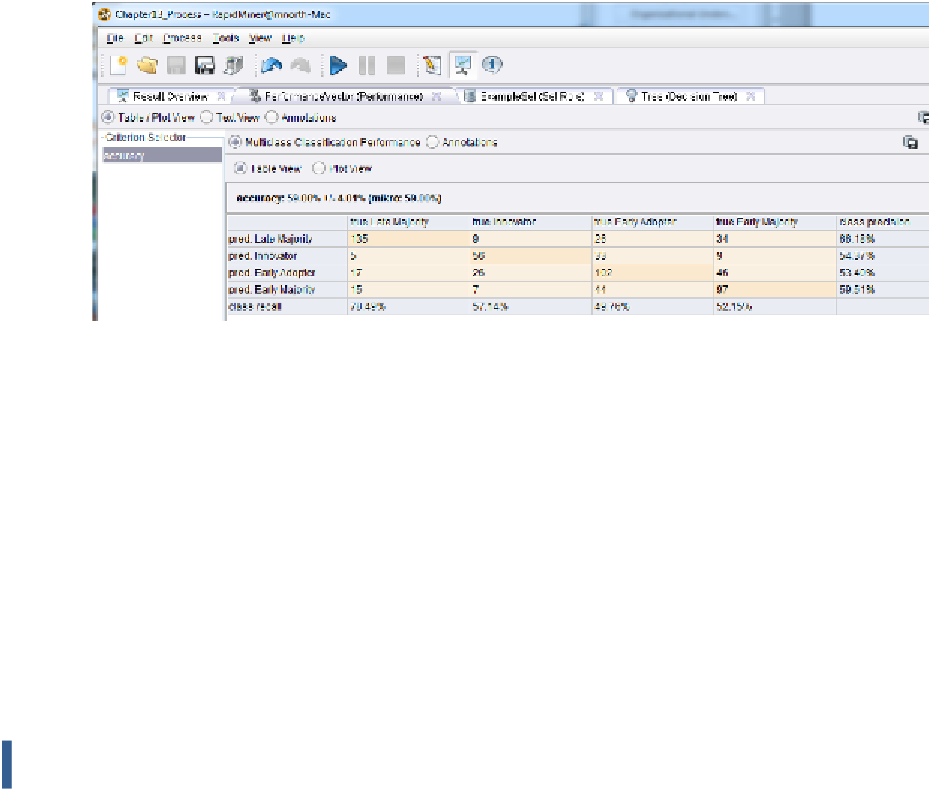

Figure 13-10. New cross-validation performance results based on the gini_index decision tree model.
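A performance matrix like the one in Figure 13-10 typically reports overall accuracy plus a class precision and a class recall for each label. As a rough illustration of how those figures are derived from the raw counts, the sketch below computes them in plain Python. The layout (rows are predicted labels, columns are true labels), the function name `summarize`, and the counts are all hypothetical; they are not the values shown in Figure 13-10.

```python
# Hypothetical confusion matrix: matrix[predicted][true] = count.
# These counts are illustrative only, NOT the results from Figure 13-10.
labels = ["yes", "no"]
matrix = {
    "yes": {"yes": 40, "no": 10},  # row: model predicted "yes"
    "no": {"yes": 5, "no": 45},    # row: model predicted "no"
}

def summarize(matrix, labels):
    """Return overall accuracy plus per-class recall and precision."""
    total = sum(matrix[p][t] for p in labels for t in labels)
    correct = sum(matrix[label][label] for label in labels)  # diagonal
    accuracy = correct / total
    recall, precision = {}, {}
    for label in labels:
        actual = sum(matrix[p][label] for p in labels)      # column total
        predicted = sum(matrix[label][t] for t in labels)   # row total
        recall[label] = matrix[label][label] / actual if actual else 0.0
        precision[label] = matrix[label][label] / predicted if predicted else 0.0
    return accuracy, recall, precision

acc, rec, prec = summarize(matrix, labels)
print(f"accuracy: {acc:.2%}")
for label in labels:
    print(f"{label}: recall {rec[label]:.2%}, precision {prec[label]:.2%}")
```

Recall answers "of the cases that really were this class, how many did the model catch?", while precision answers "of the cases the model put in this class, how many belonged there?"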

We see in Figure 13-10 that our model's ability to predict is significantly improved when we use Gini for our decision tree model. This should not come as a great surprise either. We knew from Chapter 10 that our tree was much more granular under Gini. Greater detail in the predictive tree should result in a more reliably predictive model.
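The gini_index criterion is generally understood to rest on Gini impurity: for a node whose examples fall into classes with proportions p_i, impurity is 1 minus the sum of the squared p_i, and a candidate split is scored by the size-weighted impurity of its child nodes. The sketch below is a minimal plain-Python illustration of that standard measure under that assumption; RapidMiner's internal implementation may differ in detail, and the function names and toy labels are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of one node: 1 - sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = Counter(labels)
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def split_gini(groups):
    """Impurity of a split: each child's Gini weighted by its share of examples."""
    total = sum(len(g) for g in groups)
    return sum(len(g) / total * gini(g) for g in groups)

# Toy node: 5 "yes" and 5 "no", split into two mostly-pure children.
parent = ["yes"] * 5 + ["no"] * 5
left = ["yes"] * 4 + ["no"] * 1
right = ["yes"] * 1 + ["no"] * 4

print(gini(parent))                                         # 0.5
print(round(split_gini([left, right]), 4))                  # 0.32
print(round(gini(parent) - split_gini([left, right]), 4))   # 0.18
```

A tree learner prefers the split with the largest drop in impurity (here 0.5 to 0.32). Because Gini scores candidate splits differently from other criteria, it can lead to a different, and in this case more detailed, tree.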

Adding more, and better, observations to the training data set would likely raise this model's reliability even further.
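The cross-validation behind these performance figures repeatedly holds out part of the training data, trains on the rest, and averages the scores, so every observation is used for testing exactly once. The sketch below shows that k-fold idea in plain Python. It is not RapidMiner's operator: the helper names are hypothetical, and the learner is a deliberately trivial majority-class baseline standing in for a decision tree.

```python
from collections import Counter

def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds whose sizes differ by at most one."""
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds

def cross_validate(examples, labels, k, train, predict):
    """Mean accuracy over k folds; each fold serves once as the test set."""
    scores = []
    for test_idx in k_fold_indices(len(examples), k):
        held_out = set(test_idx)
        train_x = [x for i, x in enumerate(examples) if i not in held_out]
        train_y = [y for i, y in enumerate(labels) if i not in held_out]
        model = train(train_x, train_y)
        correct = sum(predict(model, examples[i]) == labels[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)

# Hypothetical baseline learner: always predicts the most common training label.
def train_majority(xs, ys):
    return Counter(ys).most_common(1)[0][0]

def predict_majority(model, x):
    return model

examples = list(range(10))
labels = ["yes"] * 7 + ["no"] * 3
score = cross_validate(examples, labels, 5, train_majority, predict_majority)
print(f"mean accuracy over 5 folds: {score:.2f}")  # 0.70
```

Because this sketch does not shuffle, the last fold here contains only "no" examples and scores 0. That is why cross-validation is normally run on shuffled or stratified folds, and why a larger, more representative training set tends to give steadier results.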

CHAPTER SUMMARY: THE VALUE OF EXPERIENCE

So now we have seen one way to statistically evaluate a model's reliability. You have seen that there are a number of cross-validation and performance operators that you can use to check how well a model built from a training data set performs. But the bottom line is that there is no substitute for experience and expertise. Use subject matter experts to review your data mining results. Ask them to give you feedback on your model's output. Run pilot tests and use focus groups to try out your model's predictions before rolling them out organization-wide. Do not be offended if someone questions or challenges the reliability of your model's results; be humble enough to take their