
Q7. creating a lesson based on lessons created by other authors?

New issues:

Q8. adding collaborative authoring (i.e. tagging, rating, commenting on content)?

Q9. adding authoring for collaboration (i.e. defining groups of authors, subscribing to other authors)?

ing, keyword access, copying, linking of concepts, lesson creation and reuse, and collaborative authoring). Moreover, the variance for most of these questions is lower for the new system, showing a higher level of agreement between testers. For the first question, the mean is very slightly higher for the first system. In the qualitative comments, the only criticism concerns the domain maps not being in alphabetical order. In the follow-up implementations, we have already introduced various ways of ordering the domain maps besides the default ones (which are based on the order of creation).

More worrisome for the MOT 2.0 implementation is the fact that Q9, on authoring for collaboration, was scored lower, suggesting that at least some of the designers' expectations had not been fulfilled. One designer who had given it a score of 3 complained about the rights related to these groups and the exact procedure for forming them. In the version we gave them to test, groups were pre-formed, and joining and leaving groups was open to all. An administrator role is needed to support group formation, so that people can invite others into their own groups and keep unwanted persons from joining them. Such functionality is clearly desirable and has been taken into consideration in the follow-up developments.

Note that questions 1-7 are general functionality questions. This functionality was present in both systems, but it was changed in MOT 2.0 (we were trying to find out whether the changes were improvements). Questions 8 and 9 address collaboration functionality: MOT 2.0 had this functionality implemented, whereas MOT 1.0 did not. Thus, these questions were kept generic, so that they refer to future extensions in the case of MOT 1.0 and to actually implemented features in the case of MOT 2.0.

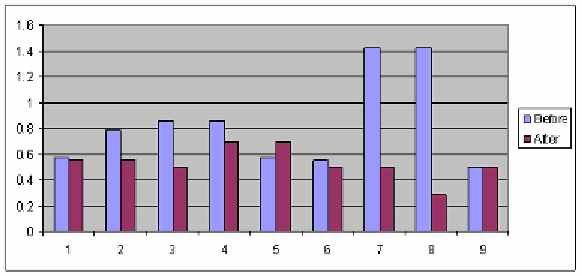

Figure 9 shows the mean response of the authors (the Softwin designers) for the two systems, whilst Figure 10 shows the variance. Because of the small number of designers in this study, we cannot make claims about statistical significance. Instead, we can observe the general preferences. Overall, both systems scored above the expected average of 2.5 (in fact, they scored above 3).
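As an illustration of how per-question means and variances like those in Figures 9 and 10 could be computed, the following minimal sketch may help. The scores are hypothetical placeholders and not the study's data; the dictionary layout, the `summarize` helper, and the use of the sample variance (rather than the population variance) are all assumptions made for the example.

```python
from statistics import mean, variance

# Hypothetical scores: one list per question, one entry per designer.
# These numbers are illustrative only and are NOT the study's data.
responses = {
    "MOT 1.0": {"Q1": [4, 4, 3, 5], "Q9": [3, 4, 4, 3]},
    "MOT 2.0": {"Q1": [4, 4, 4, 4], "Q9": [3, 3, 4, 2]},
}


def summarize(scores_by_question):
    """Return {question: (mean, sample variance)} for one system."""
    return {
        question: (mean(scores), variance(scores))
        for question, scores in scores_by_question.items()
    }


# Assumption: sample variance is used; the text does not say which
# variance estimator was applied.
summary = {system: summarize(qs) for system, qs in responses.items()}

for system, per_question in summary.items():
    for question, (m, v) in per_question.items():
        print(f"{system} {question}: mean={m:.2f} variance={v:.2f}")
```

A lower variance for a question means the designers' scores were closer together, which is the sense in which the text speaks of a higher level of agreement between testers.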

There is a slight preference for the functionality of the new system in all aspects (lesson brows-

Figure 10. The variance before (MOT 1.0) and after (MOT 2.0) by the authors
