Information Technology Reference

In-Depth Information

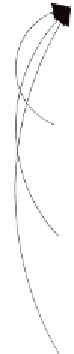

(Elman, 1990). The recurrent connections feed information from the previous execution cycle back into the network, which permits the network to learn patterns through time. Thus a recurrent network with fixed weights may produce different outputs for the same inputs, depending on the feedback signals currently held in the network. In our experiments we will use the two training methods described previously: the variable learning rate with momentum, combined with early stopping based on the cross-validation set error, and the Levenberg-Marquardt training algorithm with Automated Bayesian Regularization. The addition of recurrent connections also increases the number of weights in the network by the square of the number of hidden-layer neurons, h*h.
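The feedback behavior described above can be sketched in a few lines of Python. This is a minimal sketch, not the chapter's implementation: it assumes an Elman arrangement in which the context units copy the previous cycle's hidden activations, tanh hidden units, a linear output neuron, and arbitrary illustrative weights. The names `elman_step`, `run_sequence`, `W_IN`, `W_REC`, and the other constants are hypothetical. Feeding the same input repeatedly shows the output changing as the context changes, while setting the recurrent weights to zero reduces the network to a feedforward one whose output stays fixed.

```python
import math

# Illustrative weights for a tiny Elman network: 1 input, h = 2 hidden
# neurons, 1 output. These values are arbitrary, not from the chapter.
W_IN = [[0.5], [-0.3]]             # one row per hidden neuron, one column per input
W_REC = [[0.2, 0.1], [-0.4, 0.3]]  # hidden x hidden recurrent weights (h*h = 4)
B_H = [0.0, 0.1]                   # hidden-layer biases
W_OUT = [[1.0, -1.0]]              # one row per output neuron, one column per hidden neuron
B_OUT = [0.0]                      # output bias


def elman_step(x, context, w_in, w_rec, b_h, w_out, b_out):
    """Run one execution cycle and return (outputs, new_context).

    The context holds the hidden activations from the previous cycle;
    they re-enter the hidden layer through the recurrent weights.
    """
    hidden = []
    for i in range(len(b_h)):
        net = b_h[i]
        net += sum(w * xj for w, xj in zip(w_in[i], x))       # input contribution
        net += sum(w * ck for w, ck in zip(w_rec[i], context))  # feedback contribution
        hidden.append(math.tanh(net))  # tanh hidden units (assumption)
    outputs = [b + sum(w * hj for w, hj in zip(row, hidden))
               for row, b in zip(w_out, b_out)]  # linear output layer (assumption)
    return outputs, hidden  # the new context is the current hidden activations


def run_sequence(inputs, w_rec):
    """Feed a sequence through the network, starting from a zero context."""
    context = [0.0] * len(B_H)
    results = []
    for x in inputs:
        y, context = elman_step(x, context, W_IN, w_rec, B_H, W_OUT, B_OUT)
        results.append(y[0])
    return results


if __name__ == "__main__":
    same_input = [[1.0], [1.0], [1.0]]
    # With recurrent weights, identical inputs give different outputs.
    print(run_sequence(same_input, W_REC))
    # With all recurrent weights at zero the network is purely feedforward,
    # so identical inputs give identical outputs.
    print(run_sequence(same_input, [[0.0, 0.0], [0.0, 0.0]]))
```

The sketch covers only the forward pass; either training method would adjust `W_REC` together with the other weights.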

Figure 12. Recurrent subset of neural network design

[Figure: Recurrent Subset of Supply Chain Demand Modeling Neural Network Design. Hidden-layer neurons 1, 2, ..., h, each with a bias, and recurrent weights rw 1, rw 2, ..., rw (h*h). Legend: h = Hidden Layer Neurons; rw = Recurrent Weights.]
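The h*h growth in recurrent weights shown in Figure 12 can be checked with simple arithmetic. The sketch below assumes a fully connected network with a single hidden layer and one bias per hidden and output neuron; the function `count_weights` and the 10-5-1 layer sizes are hypothetical, chosen only for illustration and not taken from the chapter's experiments.

```python
def count_weights(n_in, h, n_out, recurrent=False):
    """Count the trainable parameters of a one-hidden-layer network.

    Assumes full connections between layers and one bias per hidden and
    output neuron. Recurrent connections add a full h x h weight matrix.
    """
    total = n_in * h + h        # input-to-hidden weights + hidden biases
    total += h * n_out + n_out  # hidden-to-output weights + output biases
    if recurrent:
        total += h * h          # recurrent weights rw 1 ... rw (h*h)
    return total


if __name__ == "__main__":
    # Hypothetical layer sizes: 10 inputs, 5 hidden neurons, 1 output.
    ff = count_weights(10, 5, 1)
    rnn = count_weights(10, 5, 1, recurrent=True)
    print(ff, rnn, rnn - ff)  # 61 86 25
```

With five hidden neurons the recurrent version carries 25 extra weights, so doubling the hidden layer roughly quadruples the recurrent overhead.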
