Biomedical Engineering Reference

Artificial Neural Networks (ANNs)

ANNs are a powerful set of tools for solving problems in pattern recognition, data processing, non-linear control and time series prediction. They offer an effective approach for handling large amounts of dynamic, non-linear and noisy data, especially when the underlying physical relationships are not fully understood (Cannas et al., 2006).

At heart, ANNs are distributed, adaptive, generally non-linear learning machines composed of processing elements called neurons (Bishop, 1995). Each neuron is connected with other neurons and/or with itself. The interconnectivity defines the topology of the ANN. The connections are scaled by adjustable parameters called weights. Each neuron receives either external inputs or inputs from the other neurons to which it is connected, and produces an output that is a non-linear static function of the weighted sum of these inputs.

Although there are now a significant number of neural network types and training algorithms (Hagan et al., 1996; Haykin, 1994; Jang, Sun, & Mizutani, 1997), this chapter will focus on the MLP. Figure 2 provides an overview of the structure of this ANN. In this case, the ANN has three layers of neurons: an input layer, a hidden layer and an output layer. Each neuron has a number of inputs (from outside the neural network or from the previous layer) and a number of outputs (leading to the subsequent layer or out of the neural network). A neuron computes its output by applying an activation function to the weighted sum of all its inputs. Data flows in one direction through this kind of neural network: external inputs enter the first layer, are transmitted through the hidden layer, and then pass to the output layer, from which the external outputs are obtained.

The ANN is trained by adjusting the weights that connect the neurons using a procedure called back-propagation (Bryson & Ho, 1975). In this procedure, for a given input-output pair, the back-propagation algorithm performs two phases of data flow (see Figure 2). First, the input pattern is propagated from the input layer to the output layer and, as a result of this forward flow of data, it produces an actual output. Then the error sig-
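The forward flow of data through a three-layer MLP described in this section can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the logistic sigmoid activation, the bias terms, the function names and the weight values are all hypothetical choices made for the example, since the text does not specify them.

```python
import math


def sigmoid(z):
    """Logistic activation (an assumed choice; the text does not name one)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_output(inputs, weights, bias=0.0, activation=sigmoid):
    """Apply the activation function to the weighted sum of the inputs.

    The bias term is an assumption added for generality; set it to 0.0
    to get the plain weighted sum described in the text.
    """
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(weighted_sum)


def layer_output(inputs, weight_rows, biases, activation=sigmoid):
    """Compute one layer; each row of weights belongs to one neuron."""
    return [
        neuron_output(inputs, weights, bias, activation)
        for weights, bias in zip(weight_rows, biases)
    ]


def mlp_forward(inputs, hidden_weights, hidden_biases,
                output_weights, output_biases):
    """Forward flow: external inputs -> hidden layer -> output layer."""
    hidden = layer_output(inputs, hidden_weights, hidden_biases)
    return layer_output(hidden, output_weights, output_biases)


# Hypothetical weights for a network with 2 inputs, 2 hidden neurons
# and 1 output neuron.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, 0.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

outputs = mlp_forward([0.0, 0.0], hidden_weights, hidden_biases,
                      output_weights, output_biases)
print(outputs)  # [0.5]
```

With all-zero inputs, both hidden neurons output sigmoid(0) = 0.5, and the output neuron receives 0.5 - 0.5 = 0, so the network returns 0.5. Training by back-propagation would adjust the weight values; that step is not shown here.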

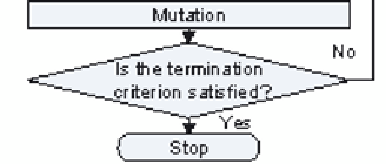

Figure 1. A basic genetic algorithm
