Biomedical Engineering Reference


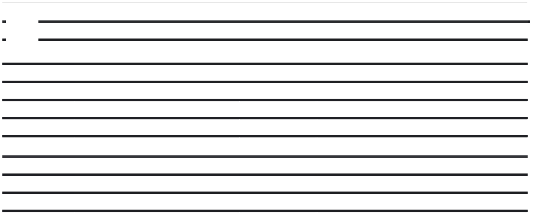

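Table 1. Average clustering results on 10 runs of these algorithms, where $n_a$ indicates the number of obtained clusters, $p_c$ is the correct classification percentage (that is, the percentage of simulations in which $n_a = n$) and Err. is the average error percentage.

| $K$ | $n=3$ RL $n_a$ | $n=3$ RL $p_c$ | $n=3$ RL Err. | $n=3$ IRL $n_a$ | $n=3$ IRL $p_c$ | $n=3$ IRL Err. | $n=4$ RL $n_a$ | $n=4$ RL $p_c$ | $n=4$ RL Err. | $n=4$ IRL $n_a$ | $n=4$ IRL $p_c$ | $n=4$ IRL Err. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 75 | 3.1 | 90 | 0.53 | 3.1 | 90 | 0.53 | 4.7 | 60 | 3.46 | 4.1 | 90 | 0.26 |
| 150 | 3.3 | 70 | 0.33 | 3.1 | 90 | 0.13 | 4.8 | 70 | 1.06 | 4.4 | 80 | 0.73 |
| 300 | 3.7 | 70 | 0.60 | 3.3 | 80 | 0.43 | 5.5 | 30 | 3.06 | 4.6 | 60 | 4.80 |
| 450 | 3.5 | 70 | 0.37 | 3.1 | 90 | 0.17 | 4.9 | 60 | 0.55 | 4.4 | 80 | 0.31 |
| 600 | 3.2 | 80 | 0.25 | 3.2 | 80 | 0.25 | 5.4 | 50 | 0.61 | 4.6 | 50 | 0.31 |
| 750 | 3.3 | 80 | 0.06 | 3.0 | 100 | 0.00 | 5.4 | 30 | 3.49 | 4.6 | 60 | 3.12 |
| 900 | 3.2 | 90 | 3.23 | 3.0 | 100 | 0.00 | 5.5 | 20 | 0.56 | 4.8 | 40 | 0.42 |
| Av. | 3.3 | 78 | 0.76 | 3.1 | 90 | 0.21 | 5.2 | 46 | 1.82 | 4.5 | 66 | 1.42 |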

concept. This concept is thus formed and learned. The process of forming and learning concepts can be seen in Figure 3.

Iterative relationships learning method: RL can be improved in many ways. To that end, an iterative approach, IRL, is presented that enhances the solution given by RL.

Suppose that, by using equation (5.1) of RL, the matrix $W_X$ related to the pattern set $X = \{X^{(k)} : k \in \mathcal{K}\}$ has been learned, and denote by $Y^{(k)}$ the stable state reached by the network (with weight matrix $W_X$) when beginning from the initial state given by $V = X^{(k)}$. Then, the cardinality of $\{Y^{(k)} : k \in \mathcal{K}\}$ (counted without multiplicities) is the number of classes that RL finds, $n_a$.

The set $Y = \{Y^{(k)} : k \in \mathcal{K}\}$ can be considered (with all multiplicities included) as a new pattern set, formed by a noiseless version of the patterns in $X$. So, if RL is applied a second time, now to $Y$, using equation (5.1) to build a new matrix $W_Y$, better results are expected than in the first iteration, since the algorithm is working with a more refined pattern set.

This whole process can be repeated iteratively until a given stop criterion is satisfied, for example, when two consecutive classifications assign each pattern to the same cluster.

SIMULATIONS

In order to show the ability of RL and IRL to perform a clustering task, as mentioned above, several simulations have been carried out whose purpose is the clustering of discrete data.

Several datasets have been created, each of them formed by $K$ 50-dimensional patterns randomly generated around $n$ centroids, whose components were integers in the interval $[1, 10]$. That is, the $n$ centroids were generated first, and the input patterns were formed from them by introducing random noise: each component of the centroid is modified with probability 0.018. So, the Hamming distance between an input pattern and its corresponding centroid follows a binomial distribution $B(50, 0.018)$. Patterns are equally distributed among the $n$ clusters. It must be noted that some patterns may lie at Hamming distance 5 or even 6 from their respective centroid, and new clusters can be formed by patterns of this kind.

So, a network with $N = 50$ neurons, each taking a value in the set $\{1, \dots, 10\}$, has been considered.
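The dataset construction described above can be sketched in a few lines of NumPy. This is only an illustrative sketch, not the authors' code: the function names, the `seed` parameter and the way patterns are assigned to clusters are assumptions. It also assumes that a modified component always takes a value different from the centroid's, which is what makes the Hamming distance exactly $B(50, 0.018)$.

```python
import numpy as np

def make_dataset(K, n, N=50, p=0.018, low=1, high=10, seed=0):
    """Generate K noisy N-dimensional patterns around n random centroids."""
    rng = np.random.default_rng(seed)
    n_values = high - low + 1
    # Centroid components are integers in [low, high].
    centroids = rng.integers(low, high + 1, size=(n, N))
    # Spread the patterns as evenly as possible over the n clusters.
    labels = np.arange(K) % n
    patterns = centroids[labels]  # fancy indexing returns a copy
    # Each component is altered independently with probability p.
    altered = rng.random((K, N)) < p
    # Assumption: an altered component gets a *different* value, obtained by
    # a cyclic shift of 1..n_values-1 positions inside [low, high].
    shift = rng.integers(1, n_values, size=(K, N))
    noisy = (patterns - low + shift) % n_values + low
    patterns[altered] = noisy[altered]
    return patterns, labels, centroids

def hamming_to_centroid(patterns, labels, centroids):
    """Hamming distance between each pattern and its own centroid."""
    return (patterns != centroids[labels]).sum(axis=1)

if __name__ == "__main__":
    X, y, C = make_dataset(K=900, n=4)
    d = hamming_to_centroid(X, y, C)
    print("mean Hamming distance:", d.mean())
    print("largest Hamming distance:", d.max())
```

With these settings the expected Hamming distance is $50 \times 0.018 = 0.9$, so most patterns differ from their centroid in at most one or two components, while the occasional pattern at distance 5 or 6 is the kind that may give rise to a spurious extra cluster.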

The parameter of learning reinforcement has been chosen as $b = 1$. It has been observed that similar results are obtained for a wide range of values of $b$.
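The iterative scheme of IRL (apply RL, take the stable states as a new pattern set, and repeat until two consecutive classifications coincide) can be outlined as follows. This is a hedged sketch of the control flow only: equation (5.1) is not reproduced here, so the RL step is passed in as a hypothetical callable `rl_step`, and `toy_rl_step` is a simple stand-in used purely to make the example runnable. It is not the RL learning rule. All names and the `max_iter` and `radius` parameters are assumptions.

```python
import numpy as np

def partition_labels(states):
    """Give equal stable states the same label, numbered by first appearance,
    so two label lists are equal exactly when the partitions coincide."""
    seen = {}
    return [seen.setdefault(tuple(row), len(seen)) for row in states]

def irl(patterns, rl_step, max_iter=20):
    """Re-apply `rl_step` to its own output until the clustering is stable.

    `rl_step` is a hypothetical callable standing in for "learn the weight
    matrix from the current pattern set, then relax every pattern to its
    stable state"; it maps a (K, N) array to a (K, N) array.
    """
    current = np.asarray(patterns)
    previous_labels = None
    for iteration in range(1, max_iter + 1):
        current = rl_step(current)
        labels = partition_labels(current)
        # Stop criterion: two consecutive classifications agree.
        if labels == previous_labels:
            break
        previous_labels = labels
    return current, labels, iteration

def toy_rl_step(patterns, radius=3):
    """Toy stand-in, NOT the RL rule: replace each pattern by the
    component-wise most frequent value among the patterns lying within
    Hamming distance `radius` of it."""
    patterns = np.asarray(patterns)
    out = np.empty_like(patterns)
    for i, p in enumerate(patterns):
        near = patterns[(patterns != p).sum(axis=1) <= radius]
        for j in range(patterns.shape[1]):
            values, counts = np.unique(near[:, j], return_counts=True)
            out[i, j] = values[np.argmax(counts)]
    return out
```

The number of distinct labels returned by `irl` plays the role of $n_a$, the number of clusters found. Passing the RL step as a parameter keeps the loop independent of how the weight matrix is actually learned.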

The results obtained in our experiments are shown in Table 1. It can be observed not only the
