Biomedical Engineering Reference

In-Depth Information

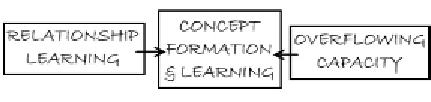

Figure 2. Scheme of the relationship between RL and overflowing network capacity

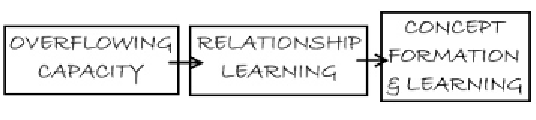

Figure 3. Scheme for the formation and learning of concepts

So, the fact of $w_{i,j}$ and $\Delta W_{i,j}$ having the same sign is a clue of a relationship that is repeated between components $i$ and $j$ of patterns $X_1$ and $X_2$. In order to reinforce the learning of this relationship, we propose a novel technique, which also presents another kind of desirable behavior: the model proposed before, given by equation (3.1), is totally independent of the order in which patterns are presented to the net. This does not actually happen in the human brain, since every new piece of information is analyzed and compared to data and concepts previously learned and stored.

So, to simulate this kind of learning, a method named RL is presented:

Let us multiply by a constant, $\beta > 1$, the components of matrices $W$ and $\Delta W$ where the equality of sign is verified, i.e., the components verifying $w_{i,j} \cdot \Delta W_{i,j} > 0$. Hence the weight matrix learned by the network is, after loading pattern $X_2$:

$$
w'_{i,j} =
\begin{cases}
w_{i,j} + \Delta W_{i,j} & \text{if } w_{i,j} \cdot \Delta W_{i,j} < 0 \\
\beta \left[ w_{i,j} + \Delta W_{i,j} \right] & \text{if } w_{i,j} \cdot \Delta W_{i,j} > 0
\end{cases}
\qquad (5.1)
$$

Similarly, if there are some patterns $\{X_1, X_2, \ldots, X_R\}$ already stored in the network, in terms of matrix $W$, and pattern $X_{R+1}$ is to be loaded, matrix $\Delta W$ (corresponding to $X_{R+1}$) is computed and then the new learning rule given by equation (5.1) is applied.

It must be noted that this method satisfies Hebb's postulate of learning quoted before.

This learning reinforcement technique, RL, has the advantage that patterns can be learned either one by one or in blocks, by analyzing a whole set of patterns at a time and comparing the resulting $\Delta W$ to the one already stored in the net. Then, for instance, if $\{X_1, \ldots, X_R\}$ has already been loaded into the net in terms of matrix $W$, we can load a whole set $\{Y_1, \ldots, Y_M\}$ by computing $\Delta W = \left( \sum_{k=1}^{M} f(y_{ki}, y_{kj}) \right)_{i,j}$ and then applying equation (5.1).

As shown in Figure 2, RL and the capacity of the network are related via their ability to make the learner (the neural network or the human brain, whichever the case) form and learn concepts.

With this idea in mind, one can think that what the capacity overflow actually induces is the learning of the existing relationships between patterns: as explained in Figure 1, several local minima, representing stored patterns, are merged when network capacity is overflowed. This implies a better recognition of the relationship between similar patterns, which usually represent the same
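The RL rule of equation (5.1), together with the block computation of $\Delta W$ as a sum of $f(y_{ki}, y_{kj})$ over the patterns of a set, can be illustrated with a minimal NumPy sketch. Several choices here are assumptions rather than part of the text: the function names `delta_w` and `rl_update` are hypothetical, the similarity function $f$ is taken to be the bipolar product $f(a, b) = a \cdot b$, the reinforcement constant is called `beta` with an arbitrary value of 2, and components with $w_{i,j} \cdot \Delta W_{i,j} = 0$ (a case equation (5.1) does not cover) are simply left unreinforced.

```python
import numpy as np


def delta_w(patterns, f=np.multiply):
    """Return the matrix whose (i, j) entry is sum_k f(y_ki, y_kj).

    `patterns` holds one pattern per row. The default f is the product
    f(a, b) = a * b, an assumption suited to bipolar (+1/-1) patterns.
    """
    patterns = np.atleast_2d(np.asarray(patterns, dtype=float))
    n = patterns.shape[1]
    dw = np.zeros((n, n))
    for y in patterns:
        # Broadcasting a column against a row evaluates f on every pair (i, j).
        dw += f(y[:, None], y[None, :])
    return dw


def rl_update(w, dw, beta=2.0):
    """Apply equation (5.1): add dw to w, reinforcing sign agreements.

    Entries with w * dw > 0 are multiplied by beta after the sum.
    Entries with w * dw <= 0 receive the plain sum; treating the zero
    case this way is an assumption, since (5.1) leaves it unspecified.
    """
    total = w + dw
    agree = (w * dw) > 0
    return np.where(agree, beta * total, total)


# Load X1 first, then reinforce with X2 (one-by-one learning).
w = delta_w([[1, -1, 1]])
w = rl_update(w, delta_w([[1, -1, -1]]), beta=2.0)

# Block learning: compute one delta for a whole set, then apply (5.1) once.
w_block = rl_update(w, delta_w([[1, -1, 1], [1, -1, -1]]), beta=2.0)
```

In this sketch the first two components agree in both patterns, so their mutual weights are doubled after the sum, while weights involving the third component, where the patterns disagree, are only added and cancel out. Because the reinforcement depends on the matrix already stored, loading the same patterns in a different order or grouping generally gives a different result, which is the order dependence the method aims for.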
