
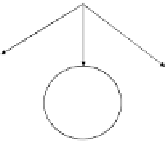

Fig. 1.5 Actual behavior of customer. (Decision tree with test nodes YRSJOB, PAYINC, I. Rate, and DEPEND; leaves are labeled P or N.)

“decision” nodes). In a decision tree, each internal node splits the instance space into two or more sub-spaces according to a discrete function of the input attribute values. In the simplest and most frequent case, each test considers a single attribute, so the instance space is partitioned according to that attribute's value. For numeric attributes, the condition refers to a range.
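A single-attribute test on a numeric attribute can be sketched as follows. This is a minimal illustration only: the function name `split_by_range`, the branch labels, the sample records, and the threshold of 3 on `YRSJOB` are assumptions chosen for the example, not values taken from the text.

```python
from collections import defaultdict


def split_by_range(instances, attribute, threshold):
    """Partition instances into two sub-spaces using one numeric attribute.

    Hypothetical helper: every instance goes to exactly one branch,
    depending on whether its attribute value lies below the threshold.
    """
    partitions = defaultdict(list)
    for instance in instances:
        branch = "below" if instance[attribute] < threshold else "at_or_above"
        partitions[branch].append(instance)
    return dict(partitions)


# Illustrative records; attribute names follow the figure, values are made up.
customers = [
    {"YRSJOB": 1, "DEPEND": 0},
    {"YRSJOB": 5, "DEPEND": 2},
    {"YRSJOB": 2, "DEPEND": 1},
]

parts = split_by_range(customers, "YRSJOB", 3)
print(len(parts["below"]), len(parts["at_or_above"]))
```

Each call corresponds to one internal node: the test looks at one attribute and sends every instance down exactly one branch.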

Each leaf is assigned to one class representing the most appropriate target value. Alternatively, the leaf may hold a probability vector (affinity vector) indicating the probability of the target attribute having a certain value. Figure 1.6 describes another example of a decision tree, one that predicts whether or not a potential customer will respond to a direct mailing.
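The two kinds of leaf content can be illustrated with a short sketch. It assumes, as one common choice, that the probability vector is estimated from the relative class frequencies of the training instances that reach the leaf, and that the "most appropriate" class is the most frequent one. The function name `leaf_summary` and the labels `respond` and `ignore` are hypothetical.

```python
from collections import Counter


def leaf_summary(labels):
    """Return (majority class, probability vector) for one leaf.

    Assumption: probabilities are relative frequencies of the class
    labels of the training instances that ended up in this leaf.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    probabilities = {cls: n / total for cls, n in counts.items()}
    majority = max(probabilities, key=probabilities.get)
    return majority, probabilities


# Made-up labels for the instances reaching a single leaf.
majority, probs = leaf_summary(["respond", "respond", "ignore", "respond"])
print(majority, probs)
```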

Internal nodes are represented as circles, whereas leaves are denoted as triangles. Two or more branches may grow out from each internal node. Each node corresponds to a certain characteristic, and the branches correspond to ranges of values. These ranges must be mutually exclusive and complete. These two properties, disjointness and completeness, are important because they ensure that each data instance is mapped to exactly one leaf.
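For a numeric attribute, disjointness and completeness can be checked mechanically. The sketch below assumes each branch is described by a half-open interval `[start, end)` and that the attribute ranges over the whole real line; the function name `is_disjoint_and_complete` and this interval representation are illustrative choices, not part of the text.

```python
import math


def is_disjoint_and_complete(intervals, low=-math.inf, high=math.inf):
    """Check that half-open intervals [start, end) tile [low, high).

    A gap between neighbours violates completeness; an overlap
    violates disjointness. Either one makes the check fail.
    """
    ordered = sorted(intervals)
    if not ordered:
        return False
    # Reject empty or reversed intervals.
    if any(start >= end for start, end in ordered):
        return False
    # The first and last intervals must reach the ends of the domain.
    if ordered[0][0] != low or ordered[-1][1] != high:
        return False
    # Each interval must begin exactly where the previous one ends.
    for (_, prev_end), (next_start, _) in zip(ordered, ordered[1:]):
        if next_start != prev_end:
            return False
    return True


ok = is_disjoint_and_complete([(-math.inf, 3), (3, math.inf)])
gap = is_disjoint_and_complete([(-math.inf, 3), (4, math.inf)])
overlap = is_disjoint_and_complete([(-math.inf, 4), (3, math.inf)])
print(ok, gap, overlap)
```

With a gap, a value such as 3.5 would match no branch; with an overlap, it would match two. Both cases leave the mapping from instance to leaf ill-defined.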

Instances are classified by navigating them from the root of the tree down to a leaf according to the outcome of the tests along the path. We start at the root of the tree; we consider the characteristic that corresponds
