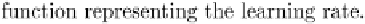

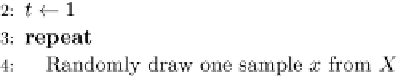

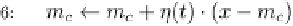

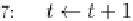

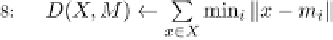

Fig. 14.4 Algorithm for fuzzifying numeric attributes.

The only parameters that need to be determined are the set of $k$ centers $M = \{m_1, \ldots, m_k\}$. The centers can be found using the algorithm presented in Figure 14.4. Note that in order to use the algorithm, a monotonically decreasing learning rate function should be provided.
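Figure 14.4 survives here only as an image, so the following is not a transcription of it. It is a minimal sketch of one common way to fit $k$ centers with a decreasing learning rate, assuming a competitive-learning update in which only the center nearest to each sample is moved. The names `find_centers` and `inverse_decay`, the `epochs` parameter, the evenly spaced initialisation, and the specific decay formula are all assumptions made for illustration.

```python
def inverse_decay(step, eta0=0.5, decay=0.01):
    """Monotonically decreasing learning rate (assumed form, not from the source)."""
    return eta0 / (1.0 + decay * step)


def find_centers(values, k, learning_rate=inverse_decay, epochs=20):
    """Estimate k centers for one numeric attribute by competitive learning."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    # Assumed initialisation: spread the centers evenly over the value range.
    centers = [lo + (j + 0.5) * width for j in range(k)]
    step = 0
    for _ in range(epochs):
        for x in values:
            eta = learning_rate(step)
            # Find the center closest to the current sample ...
            nearest = min(range(k), key=lambda j: abs(x - centers[j]))
            # ... and move only that center a fraction eta toward the sample.
            centers[nearest] += eta * (x - centers[nearest])
            step += 1
    return sorted(centers)
```

Calling `find_centers(values, 3)` on a list of numeric attribute values returns three centers in ascending order. Because the learning rate shrinks with every step, early samples move the centers a long way and later samples only fine-tune them, which is why a decreasing schedule is required.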

14.6.2 Inducing of Fuzzy Decision Tree

The induction algorithm of fuzzy decision tree is presented in Figure 14.5. The algorithm measures the classification ambiguity associated with each attribute and splits the data using the attribute with the smallest classification ambiguity. The classification ambiguity of attribute $a_i$ with linguistic terms $v_{i,j}$, $j = 1, \ldots, k$ on fuzzy evidence $S$, denoted as $G(a_i \mid S)$, is the weighted average of classification ambiguity calculated as:

$$G(a_i \mid S) = \sum_{j=1}^{k} w(v_{i,j} \mid S) \cdot G(v_{i,j} \mid S), \tag{14.8}$$

where $w(v_{i,j} \mid S)$ is the weight which represents the relative size of $v_{i,j}$ and is defined as:

$$w(v_{i,j} \mid S) = \frac{M(v_{i,j} \mid S)}{\sum_{k} M(v_{i,k} \mid S)}. \tag{14.9}$$

The classification ambiguity of $v_{i,j}$ is defined as $G(v_{i,j} \mid S) = g(p(C \mid v_{i,j}))$, which is measured based on the possibility distribution vector $p(C \mid v_{i,j}) = (p(c_1 \mid v_{i,j}), \ldots, p(c_{|k|} \mid v_{i,j}))$.
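Equations (14.8) and (14.9) can be sketched in a few lines of code. The sketch below assumes that the sizes $M(v_{i,j} \mid S)$ and the per-term ambiguities $G(v_{i,j} \mid S)$ have already been computed and are passed in as plain lists; the function $g$ itself is not implemented. The function names, the attribute names, and the numbers in the usage note are hypothetical.

```python
def term_weights(sizes):
    """Equation (14.9): each term's size divided by the total size of all terms."""
    total = sum(sizes)
    if total == 0:
        raise ValueError("all linguistic terms have zero size on this evidence")
    return [m / total for m in sizes]


def attribute_ambiguity(sizes, term_ambiguities):
    """Equation (14.8): weighted average of the per-term ambiguities."""
    if len(sizes) != len(term_ambiguities):
        raise ValueError("need one ambiguity value per linguistic term")
    weights = term_weights(sizes)
    return sum(w * g for w, g in zip(weights, term_ambiguities))


def best_attribute(candidates):
    """Pick the attribute with the smallest classification ambiguity.

    candidates maps an attribute name to a (sizes, term_ambiguities) pair.
    """
    return min(candidates, key=lambda name: attribute_ambiguity(*candidates[name]))
```

For instance, an attribute with two terms of sizes 2.0 and 6.0 and ambiguities 0.4 and 0.2 gets weights 0.25 and 0.75, so `attribute_ambiguity([2.0, 6.0], [0.4, 0.2])` evaluates to 0.25. Passing several such attributes to `best_attribute` returns the one the induction step would split on.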
