[Fig. 11.3 node labels: YRSJOB (<2, ≥2), option node, LTV (≥75%, <75%), MARST, DEPEND (>0, =0); leaf classes A, D and M.]

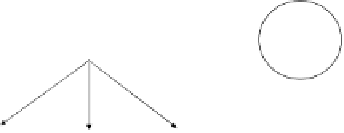

Fig. 11.3 Illustration of option tree.

Figure 11.3 illustrates an option tree. Recall that the task is to classify mortgage applications into one of three classes: approved (“A”), denied (“D”) or manual underwriting (“M”). The tree looks like any other decision tree, with the addition of an option node, which is drawn as a rectangle. If years at current job (YRSJOB) is greater than or equal to two, there are two options to choose from. Each option leads to a different subtree that separately solves the problem and makes a classification.
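The idea that every option below an option node produces its own classification can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the class names `Leaf`, `Split` and `Option`, the helper `leaf_labels`, and the small tree itself are hypothetical and do not reproduce Fig. 11.3 in full. In particular, the leaves for YRSJOB < 2 and DEPEND = 0 are placeholders.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, List, Union


@dataclass
class Leaf:
    label: str  # "A" (approved), "D" (denied) or "M" (manual underwriting)


@dataclass
class Split:
    test: Callable[[dict], str]  # maps an instance to a branch name
    branches: Dict[str, "Node"]


@dataclass
class Option:
    options: List["Node"]  # every option is followed, not just one


Node = Union[Leaf, Split, Option]


def leaf_labels(node: Node, x: dict) -> List[str]:
    """Return the labels of all leaves reached by instance x."""
    if isinstance(node, Leaf):
        return [node.label]
    if isinstance(node, Split):
        return leaf_labels(node.branches[node.test(x)], x)
    # Option node: descend into every option and collect all labels.
    labels: List[str] = []
    for child in node.options:
        labels.extend(leaf_labels(child, x))
    return labels


# Hypothetical, simplified tree (assumption, not the full Fig. 11.3).
tree = Split(
    test=lambda x: ">=2" if x["YRSJOB"] >= 2 else "<2",
    branches={
        "<2": Leaf("M"),  # placeholder leaf
        ">=2": Option([
            Split(lambda x: x["MARST"],
                  {"Married": Leaf("A"), "Single": Leaf("D")}),
            Split(lambda x: ">0" if x["DEPEND"] > 0 else "=0",
                  {">0": Leaf("A"), "=0": Leaf("D")}),  # "=0" is a placeholder
        ]),
    },
)

married = leaf_labels(tree, {"YRSJOB": 3, "MARST": "Married", "DEPEND": 3})
single = leaf_labels(tree, {"YRSJOB": 3, "MARST": "Single", "DEPEND": 3})

print(Counter(married))  # both options agree on one class
print(Counter(single))   # the two options disagree, one vote each
```

With only two options, a disagreement always yields one vote per class, so a plain vote count cannot separate them.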

To classify a new instance with an option tree, the labels predicted by all children of the option node must be weighted and combined. For example, to classify an instance with YRSJOB=3, MARST=“Married” and DEPEND=3, we need to combine the fifth leaf and the sixth leaf (from left to right), resulting in the class “A” (since both are associated with class “A”). On the other hand, to classify an instance with YRSJOB=3, MARST=“Single” and DEPEND=3, we need to combine the fourth leaf and the sixth leaf, resulting in either class “A” or “D”. Because simple majority voting breaks such a tie arbitrarily, it never makes sense to create an option node with only two children. Consequently, the minimum number of choices for an option node is three. Nevertheless, assuming that a probability vector is associated with each leaf, it is possible to use a Bayesian combination to obtain a non-arbitrary selection. In fact, the model
