In fact, bagging can be considered to be wagging with allocation of weights from the Poisson distribution (each instance is represented in the sample a discrete number of times). Alternatively, it is possible to allocate the weights from the exponential distribution, because the exponential distribution is the continuous-valued counterpart to the Poisson distribution [Webb (2000)].
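The difference between the two weighting schemes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names are hypothetical, a mean weight of 1 per instance is assumed, and the Poisson draws use Knuth's simple sampling method because the standard library has no Poisson sampler. In a bootstrap sample of size n the counts are only approximately Poisson with mean 1.

```python
import math
import random
from collections import Counter

def bootstrap_counts(n, rng):
    """Bagging view: draw n indices with replacement and count how
    often each instance appears (a discrete weight per instance)."""
    drawn = Counter(rng.randrange(n) for _ in range(n))
    return [drawn[i] for i in range(n)]

def poisson_weights(n, rng, lam=1.0):
    """Wagging with discrete weights: one independent Poisson(lam)
    draw per instance (Knuth's method; lam=1.0 is an assumption)."""
    threshold = math.exp(-lam)
    weights = []
    for _ in range(n):
        k, p = 0, rng.random()
        while p > threshold:
            k += 1
            p *= rng.random()
        weights.append(k)
    return weights

def exponential_weights(n, rng, rate=1.0):
    """Wagging with continuous weights: one exponential draw per
    instance (rate=1.0, i.e. mean weight 1, is an assumption)."""
    return [rng.expovariate(rate) for _ in range(n)]

rng = random.Random(0)
print(bootstrap_counts(8, rng))     # integers that sum to 8
print(poisson_weights(8, rng))      # non-negative integers
print(exponential_weights(8, rng))  # non-negative real numbers
```

With discrete weights some instances receive weight 0 and are left out of a given training round, whereas exponential weights keep essentially every instance in play with a varying degree of influence.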

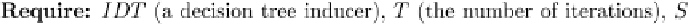

9.4.2.3 Random Forest

A Random Forest ensemble uses a large number of individual, unpruned decision trees which are created by randomizing the split at each node of the decision tree [Breiman (2001)]. Each tree is likely to be less accurate than a tree created with the exact splits. But, by combining several of these “approximate” trees in an ensemble, we can improve the accuracy, often doing better than a single tree with exact splits.

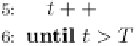

The individual trees are constructed using the algorithm presented in Figure 9.13. The input parameter N represents the number of input variables that will be used to determine the decision at a node of the tree. This number should be much less than the number of attributes in the training set. Note that bagging can be thought of as a special case of random forests obtained when N is set to the number of attributes in the original training set. The IDT in Figure 9.13 represents any top-down decision tree induction algorithm with the following modification: the decision tree is not pruned and at each node, rather than choosing the best split among all attributes, the IDT randomly samples N of the attributes and chooses the best split from among those variables. The classification of an unlabeled instance is performed using majority vote.

Fig. 9.13 The random forest algorithm.
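The procedure described above can be sketched in plain Python. This is a minimal illustration, not the exact algorithm of Figure 9.13: it assumes numeric attributes, threshold splits scored by Gini impurity, and one bootstrap sample per tree (in line with the remark that bagging is a special case). All function names are hypothetical, and `n_attrs` plays the role of N.

```python
import random
from collections import Counter

def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(rows, labels, attrs):
    """Best (attribute, threshold) among the sampled attributes, or
    None if no candidate lowers the weighted Gini impurity."""
    best, best_score = None, gini(labels)
    for a in attrs:
        # The largest value is skipped so both sides stay non-empty.
        for t in sorted({r[a] for r in rows})[:-1]:
            left = [y for r, y in zip(rows, labels) if r[a] <= t]
            right = [y for r, y in zip(rows, labels) if r[a] > t]
            score = (len(left) * gini(left)
                     + len(right) * gini(right)) / len(labels)
            if score < best_score:
                best, best_score = (a, t), score
    return best

def grow_tree(rows, labels, n_attrs, rng):
    """Unpruned tree; each node only considers n_attrs random attributes."""
    if len(set(labels)) == 1:
        return {"label": labels[0]}
    attrs = rng.sample(range(len(rows[0])), n_attrs)
    split = best_split(rows, labels, attrs)
    if split is None:  # no useful split among the sampled attributes
        return {"label": Counter(labels).most_common(1)[0][0]}
    a, t = split
    left = [(r, y) for r, y in zip(rows, labels) if r[a] <= t]
    right = [(r, y) for r, y in zip(rows, labels) if r[a] > t]
    return {"attr": a, "thr": t,
            "left": grow_tree([r for r, _ in left],
                              [y for _, y in left], n_attrs, rng),
            "right": grow_tree([r for r, _ in right],
                               [y for _, y in right], n_attrs, rng)}

def predict_tree(node, row):
    while "label" not in node:
        node = node["left"] if row[node["attr"]] <= node["thr"] else node["right"]
    return node["label"]

def random_forest(rows, labels, n_trees, n_attrs, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Assumption: each tree is trained on a bootstrap sample.
        idx = [rng.randrange(len(rows)) for _ in rows]
        forest.append(grow_tree([rows[i] for i in idx],
                                [labels[i] for i in idx], n_attrs, rng))
    return forest

def predict_forest(forest, row):
    """Majority vote over the individual trees."""
    votes = Counter(predict_tree(tree, row) for tree in forest)
    return votes.most_common(1)[0][0]

# Toy data: three numeric attributes, two well-separated classes.
rows = [[1.0, 10.0, 0.1], [1.2, 11.0, 0.2], [0.8, 9.5, 0.3],
        [3.0, 20.0, 0.9], [3.2, 21.0, 1.0], [2.9, 19.0, 0.8]]
labels = ["a", "a", "a", "b", "b", "b"]
forest = random_forest(rows, labels, n_trees=25, n_attrs=1)
print(predict_forest(forest, [0.5, 9.0, 0.0]))
print(predict_forest(forest, [3.5, 22.0, 1.2]))
```

Setting `n_attrs` to the total number of attributes makes every node search all attributes, which reduces this sketch to bagging of unpruned trees.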
