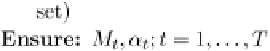

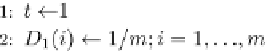

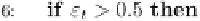

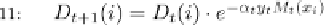

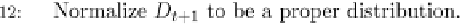

Fig. 9.8 The AdaBoost algorithm.

To use the boosting algorithm with decision trees, the decision tree inducer must be able to handle weighted instances. Some decision tree inducers (such as C4.5) can treat different instances differently. This is done by weighting the contribution of each instance to the analysis according to a provided weight (between 0 and 1). If weighted instances are used, the probability vectors in the leaf nodes may be built from non-integer counts, because the sum of the weights of the instances that reach a leaf is not necessarily an integer.
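The effect of instance weights on a leaf's probability vector can be illustrated with a minimal sketch. The function name `weighted_leaf_distribution` and the sample labels and weights are hypothetical and chosen only for illustration; this is not the code of C4.5 or of any particular inducer.

```python
from collections import defaultdict


def weighted_leaf_distribution(labels, weights):
    """Estimate class probabilities in a leaf from weighted instances.

    Hypothetical helper: each instance contributes its weight, rather
    than a count of 1, to the total of its class.
    """
    totals = defaultdict(float)
    for label, weight in zip(labels, weights):
        totals[label] += weight
    total_weight = sum(totals.values())
    if total_weight <= 0:
        raise ValueError("the leaf must contain positive total weight")
    # Normalize the weighted class totals into a probability vector.
    return {label: w / total_weight for label, w in totals.items()}


# Three instances reach the leaf; their weights sum to 1.0, not to 3.
labels = ["yes", "yes", "no"]
weights = [0.5, 0.25, 0.25]
dist = weighted_leaf_distribution(labels, weights)
```

With unit weights the same leaf would hold counts of 2 and 1. With the weights above, the class totals are 0.75 and 0.25, so the counts that feed the probability vector are no longer integers.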

The basic AdaBoost algorithm, described in Figure 9.8, deals with binary classification. Freund and Schapire (1996) describe two versions of the AdaBoost algorithm (AdaBoost.M1 and AdaBoost.M2), which are equivalent for binary classification and differ in their handling of multiclass classification problems. Figure 9.9 describes the pseudo-code of AdaBoost.M1. The classification of a new instance is performed according to the following equation:

$$H(x) = \underset{y \in dom(y)}{\operatorname{argmax}} \sum_{t:\, M_t(x) = y} \log \frac{1}{\beta_t} \tag{9.17}$$

where $\beta_t$ is defined in Figure 9.9.
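The weighted vote in Equation 9.17 can be sketched as follows. This is a minimal illustration, not the pseudo-code of Figure 9.9: the function name `adaboost_m1_predict`, the toy classifiers, and the β values are all hypothetical. It assumes that each base classifier is a callable returning a class label and that every β_t lies strictly between 0 and 1, so that each vote log(1/β_t) is positive.

```python
import math
from collections import defaultdict


def adaboost_m1_predict(x, classifiers, betas):
    """Return the class with the largest total vote for instance x.

    Each classifier M_t votes for the class it predicts, with vote
    weight log(1 / beta_t). Assumes 0 < beta_t < 1 for every t.
    """
    votes = defaultdict(float)
    for classifier, beta in zip(classifiers, betas):
        if not 0.0 < beta < 1.0:
            raise ValueError("each beta must lie strictly between 0 and 1")
        votes[classifier(x)] += math.log(1.0 / beta)
    # argmax over the classes that received at least one vote
    return max(votes, key=votes.get)


# Hypothetical base classifiers that ignore x and always return one label.
classifiers = [lambda x: "a", lambda x: "b", lambda x: "b"]

# One accurate classifier (small beta) outvotes two weaker ones.
prediction_skewed = adaboost_m1_predict(None, classifiers, [0.1, 0.5, 0.5])

# With equal betas, the majority label wins.
prediction_equal = adaboost_m1_predict(None, classifiers, [0.5, 0.5, 0.5])
```

In the first call, class "a" receives log(10) ≈ 2.30, while class "b" receives 2·log(2) ≈ 1.39, so a single classifier with a small β_t can outweigh two classifiers with larger values. In the second call all votes are equal and the majority class "b" is returned.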
