
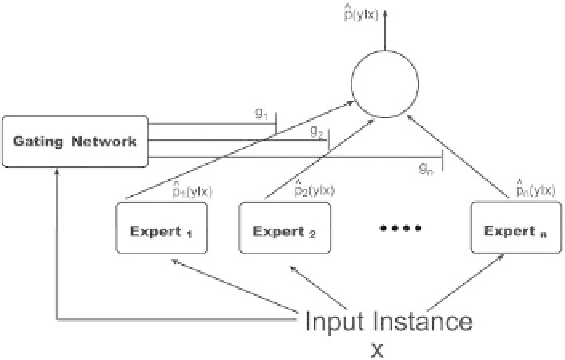

Fig. 9.3 Illustration of n-expert structure.

9.3.1.12 Gating Network

Figure 9.3 illustrates an n-expert structure. Each expert outputs the conditional probability of the target attribute given the input instance. A gating network combines the experts by assigning a weight to each one. These weights are not constant; they are functions of the input instance x. The gating network selects one or a few experts (classifiers) that appear to have the most appropriate class distribution for the example. In effect, each expert specializes in a small portion of the input space.
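
The combination step described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the formulation of any particular paper: the linear gate with a softmax, the names softmax, gate_weights and mixture_predict, and the two toy experts are all assumptions introduced here. It shows how input-dependent weights blend the experts' class distributions into a single distribution.

```python
import math


def softmax(scores):
    """Turn arbitrary real scores into non-negative weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gate_weights(x, gate_params):
    """Assumed gate: one linear score (weights, bias) per expert, then softmax."""
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
              for w, b in gate_params]
    return softmax(scores)


def mixture_predict(x, experts, gate_params):
    """Blend the experts' class distributions using weights that depend on x."""
    g = gate_weights(x, gate_params)
    dists = [expert(x) for expert in experts]
    n_classes = len(dists[0])
    return [sum(g_i * d[c] for g_i, d in zip(g, dists))
            for c in range(n_classes)]


# Two hypothetical experts, each returning a fixed class distribution.
def expert_a(x):
    return [0.9, 0.1]


def expert_b(x):
    return [0.2, 0.8]


# The gate favours expert_a for negative inputs and expert_b for positive ones.
gate_params = [([-4.0], 0.0), ([4.0], 0.0)]

p_left = mixture_predict([-2.0], [expert_a, expert_b], gate_params)
p_right = mixture_predict([2.0], [expert_a, expert_b], gate_params)
print(p_left, p_right)
```

With these toy parameters the gate puts almost all of its weight on one expert at each of the two inputs, which is the sense in which each expert ends up responsible for its own region of the input space.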

An extension to the basic mixture of experts, known as hierarchical mixtures of experts (HME), has been proposed in [Jordan and Jacobs (1994)]. This extension decomposes the space into sub-spaces, and then recursively decomposes each sub-space into sub-spaces.
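
The recursive decomposition can be pictured as a tree in which every internal node holds its own gate and every leaf holds an expert. The sketch below covers only the prediction pass under assumptions made for illustration: the dictionary-based tree layout, the linear-softmax gates, and the names hme_predict and leaf are hypothetical, and no training procedure is shown.

```python
import math


def softmax(scores):
    """Turn arbitrary real scores into non-negative weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def leaf(dist):
    """Hypothetical leaf expert that always returns a fixed class distribution."""
    return {"expert": lambda x: list(dist)}


def hme_predict(node, x):
    """Recursively blend child predictions, weighting each child by its gate."""
    if "expert" in node:
        return node["expert"](x)
    # Assumed gate: one linear score (weights, bias) per child, then softmax.
    scores = [sum(w_j * x_j for w_j, x_j in zip(w, x)) + b
              for w, b in node["gate"]]
    g = softmax(scores)
    child_dists = [hme_predict(child, x) for child in node["children"]]
    n_classes = len(child_dists[0])
    return [sum(g_i * d[c] for g_i, d in zip(g, child_dists))
            for c in range(n_classes)]


# The top gate splits on x[0]; the left subtree splits again on x[1].
tree = {
    "gate": [([-5.0, 0.0], 0.0), ([5.0, 0.0], 0.0)],
    "children": [
        {
            "gate": [([0.0, -5.0], 0.0), ([0.0, 5.0], 0.0)],
            "children": [leaf([0.9, 0.1]), leaf([0.6, 0.4])],
        },
        leaf([0.1, 0.9]),
    ],
}

p_nested = hme_predict(tree, [-2.0, 2.0])  # routed into the left subtree
p_direct = hme_predict(tree, [2.0, 0.0])   # routed to the right-hand leaf
print(p_nested, p_direct)
```

Because every gate outputs soft weights, the sub-spaces overlap smoothly rather than being cut by hard boundaries; the sharp routing seen in this example comes only from the large gate coefficients chosen for the toy tree.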

Variations of the basic mixture of experts method have been developed to accommodate specific domain problems. A specialized modular network called the Meta-pi network has been used to solve the vowel-speaker problem [Hampshire and Waibel (1992); Peng et al. (1996)]. Other extensions include nonlinear gated experts for time series [Weigend et al. (1995)]; a revised modular network for predicting the survival of AIDS patients [Ohno-Machado and Musen (1997)]; and a new approach for combining multiple experts to improve handwritten numeral recognition [Rahman and Fairhurst (1997)].
