
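Table 9.1 (Continued)

| Dur | Wage | Stat | Vac | Dis | Dental | Ber | Health | Class |
|---|---|---|---|---|---|---|---|---|
| 2 | 4.5 | 11 | average | ? | full | yes | full | good |
| 2 | 4.6 | ? | ? | yes | half | ? | half | good |
| 2 | 5 | 11 | below | yes | ? | ? | full | good |
| 2 | 5.7 | 11 | average | yes | full | yes | full | good |
| 2 | 7 | 11 | ? | yes | full | ? | ? | good |
| 3 | 2 | ? | ? | yes | half | yes | ? | good |
| 3 | 3.5 | 13 | generous | ? | ? | yes | full | good |
| 3 | 4 | 11 | average | yes | full | ? | full | good |
| 3 | 5 | 11 | generous | yes | ? | ? | full | good |
| 3 | 5 | 12 | average | ? | half | yes | half | good |
| 3 | 6 | 9 | generous | yes | full | yes | full | good |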

(Figure 9.1 labels, class distribution Bad / Good per branch: branch < 2.65: 0.867 / 0.133; branch labeled 2.65: 0.171 / 0.829; missing value (?): 0 / 1.)

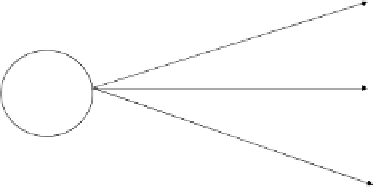

Fig. 9.1 Decision stump classifier for solving the labor classification task.
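Read as a rule, the stump in Figure 9.1 routes each instance to one of three branches and predicts from that branch's class distribution. The sketch below is a minimal rendering under stated assumptions: the split attribute's name is not legible in the extracted figure, so the input is a hypothetical numeric value `x`; the branch printed only as "2.65" is assumed to mean `x >= 2.65`; and a missing value (`?`) is passed as `None`. The names `THRESHOLD`, `BRANCHES`, `stump_distribution` and `stump_predict` are illustrative, not from the source.

```python
from typing import Dict, Optional

# Class distributions (bad, good) per branch, as printed in Figure 9.1.
# Assumption: the right-hand branch labeled "2.65" covers x >= 2.65.
THRESHOLD = 2.65
BRANCHES: Dict[str, Dict[str, float]] = {
    "below": {"bad": 0.867, "good": 0.133},        # x < 2.65
    "at_or_above": {"bad": 0.171, "good": 0.829},  # x >= 2.65 (assumed)
    "missing": {"bad": 0.0, "good": 1.0},          # x is '?'
}


def stump_distribution(x: Optional[float]) -> Dict[str, float]:
    """Return the class distribution of the branch that x falls into."""
    if x is None:  # a '?' in the data is routed to the missing-value branch
        return BRANCHES["missing"]
    return BRANCHES["below"] if x < THRESHOLD else BRANCHES["at_or_above"]


def stump_predict(x: Optional[float]) -> str:
    """Predict the most probable class in the selected branch."""
    dist = stump_distribution(x)
    return max(dist, key=dist.get)


print(stump_predict(2.0))   # bad
print(stump_predict(4.5))   # good
print(stump_predict(None))  # good
```

Keeping a separate branch for missing values, rather than imputing them, mirrors the three-way split visible in the figure.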

- Dis: employer's help during long-term employee disability
- Dental: employer's contribution towards a dental plan
- Ber: employer's financial contribution towards the costs of bereavement
- Health: employer's contribution towards the health plan.

Applying the Decision Stump inducer to the Labor dataset yields the model depicted in Figure 9.1. Using 10-fold cross-validation, the estimated generalization accuracy of this model is 59.64%.
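The two steps just described, inducing a one-level tree and estimating its accuracy with k-fold cross-validation, can be sketched from scratch as follows. This is a simplified illustration, not the inducer that produced Figure 9.1: it handles numeric attributes only, represents `?` as `None`, selects the split with the fewest training errors (an assumed criterion; real implementations may use an impurity measure instead), gives missing values their own branch as the figure does, and uses plain rather than stratified folds. All function names are hypothetical and the toy data is invented.

```python
import random
from collections import Counter
from typing import List, Optional, Sequence, Tuple

Row = Sequence[Optional[float]]           # None stands for a missing value ('?')
Stump = Tuple[int, float, str, str, str]  # (feature, threshold, below, at_or_above, missing)


def majority(labels: List[str], default: str) -> str:
    """Most frequent label, or `default` when the branch is empty."""
    return Counter(labels).most_common(1)[0][0] if labels else default


def fit_stump(X: List[Row], y: List[str]) -> Stump:
    """Pick the single (feature, threshold) split with the fewest training errors."""
    overall = majority(y, y[0])
    best: Optional[Stump] = None
    best_err = float("inf")
    for f in range(len(X[0])):
        values = sorted({row[f] for row in X if row[f] is not None})
        # Candidate thresholds: midpoints between consecutive distinct values.
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            below = [c for row, c in zip(X, y) if row[f] is not None and row[f] < t]
            above = [c for row, c in zip(X, y) if row[f] is not None and row[f] >= t]
            missing = [c for row, c in zip(X, y) if row[f] is None]
            labels = (majority(below, overall), majority(above, overall),
                      majority(missing, overall))
            err = (sum(c != labels[0] for c in below)
                   + sum(c != labels[1] for c in above)
                   + sum(c != labels[2] for c in missing))
            if err < best_err:
                best, best_err = (f, t) + labels, err
    # No attribute has two distinct values: fall back to a constant prediction.
    return best if best is not None else (0, float("inf"), overall, overall, overall)


def predict_stump(stump: Stump, row: Row) -> str:
    """Route a row down the stump and return the branch label."""
    f, t, below, above, missing = stump
    if row[f] is None:
        return missing
    return below if row[f] < t else above


def cross_val_accuracy(X: List[Row], y: List[str], k: int = 10, seed: int = 0) -> float:
    """Plain (non-stratified) k-fold cross-validation accuracy of the stump."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    correct = 0
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        stump = fit_stump([X[i] for i in train], [y[i] for i in train])
        correct += sum(predict_stump(stump, X[i]) == y[i] for i in fold)
    return correct / len(X)


# Invented toy data (not the Labor dataset): two numeric attributes, None = '?'.
X = [[1.5, None], [2.0, 3.0], [2.5, 1.0], [3.0, 2.0],
     [4.0, None], [4.5, 1.0], [5.0, 2.0], [None, 3.0]]
y = ["bad", "bad", "bad", "good", "good", "good", "good", "good"]
print(fit_stump(X, y))                # (0, 2.75, 'bad', 'good', 'good')
print(cross_val_accuracy(X, y, k=4))
```

Each instance is tested exactly once, so the returned value is the fraction of held-out predictions that were correct; the estimate varies with the fold assignment (`seed`) and the split criterion, so it should not be expected to reproduce the 59.64% reported above.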

Next, we run an ensemble algorithm that uses Decision Stump as the base inducer and trains four decision trees. Specifically, we use the Bagging (bootstrap aggregating) ensemble method. Bagging is a simple yet effective method for generating an ensemble of classifiers, or in this case a forest of decision trees. Each decision tree in the ensemble is trained on a sample of instances drawn with replacement (allowing repetitions) from the original training set. Consequently, in each iteration some of the original instances
