… none of those are at all human, and therefore any decision based on that model is bound to be flawed in its efforts to *look* human (or animal, etc.).

If we swap a few things into those four items, however, we can start to sense a model that is a bit more like what we encounter in reality.

- Has *some of* the relevant information available
- Is able to perceive the information, albeit with *some inaccuracies*
- Is able to perform the calculations necessary *within a margin of error*
- Makes decisions that involve factors *other than* perfect rationality

In each of the italicized areas above, we have washed out some of the perfect computational ability that computers just happen to be good at. In its place, we now have some more fuzzy ideas and nebulous concepts. How do we know *how much* information to make available? How do we construct *some inaccuracies* in the perception? How do we insert a *degree of error* into calculations? How much error? And what sorts of factors do we include other than perfect rationality? For that matter, what other factors *are there*?
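One way to make the first few of these questions concrete is to treat each as a tunable parameter. The sketch below is only an illustration, not a method given in the text: the function name `perceive`, the parameters `visibility` and `noise_std`, the sample facts, and the choice of Gaussian noise are all assumptions.

```python
import random

def perceive(true_state, visibility=0.7, noise_std=0.1, rng=None):
    """Return an agent's imperfect belief about a dict of numeric facts.

    visibility: probability that each fact is noticed at all (a hypothetical
        knob for "how much information to make available").
    noise_std: relative standard deviation of the Gaussian error applied to
        each noticed value (a hypothetical knob for "some inaccuracies").
    """
    rng = rng or random.Random()
    belief = {}
    for key, value in true_state.items():
        if rng.random() >= visibility:
            continue  # the agent never notices this fact
        # Distort the noticed value by a random relative error.
        belief[key] = value * (1.0 + rng.gauss(0.0, noise_std))
    return belief

# Made-up ground truth for illustration.
true_state = {"enemy_distance": 40.0, "enemy_health": 75.0, "ally_count": 3.0}
belief = perceive(true_state, visibility=0.7, noise_std=0.1, rng=random.Random(42))
print(belief)
```

Setting `visibility=1.0` and `noise_std=0.0` recovers the perfectly informed agent, so the two parameters act as dials between that extreme and a more fallible one.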

This is where we can put *positive* or *descriptive* decision theory to work. By starting with the raw logic of what they *should* do and applying an analysis of what people, animals, or even orcs *tend to* do, we can build models that guide what they *will* do in our games (Figure 4.3).

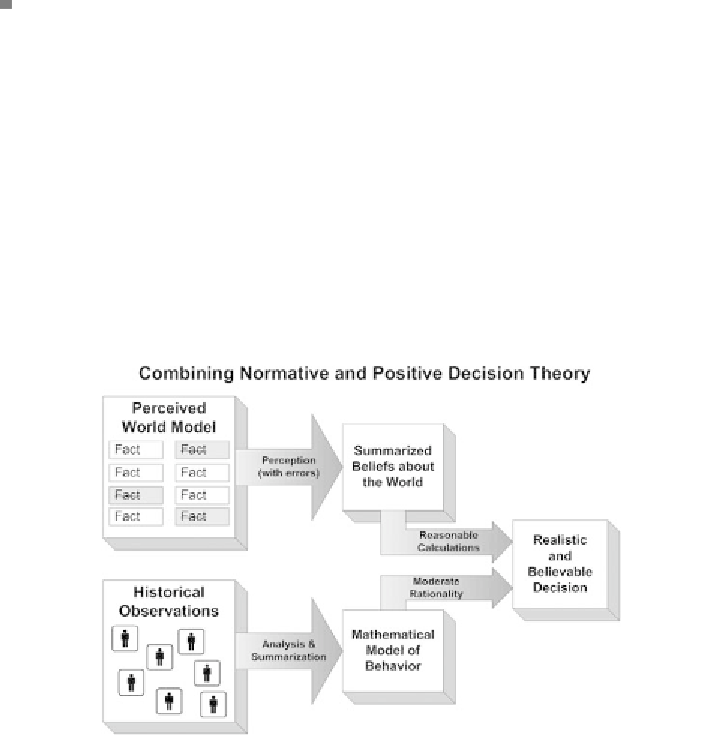

FIGURE 4.3 Combining normative and positive decision theory takes the limited world model perceived by an agent, adds the potential for errors, and creates a belief about the world. When combined in a moderately rational fashion with a behavior model constructed from observations of what people tend to do, it yields varied, yet believable realistic behaviors.
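The combination the caption describes could be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the book's implementation: `choose_action`, the `rationality` parameter, the toy `utility` function, and the tendency weights are all hypothetical, and the exponential weighting is just one common way to be "moderately rational."

```python
import math
import random

def choose_action(belief, utility_fn, tendencies, rationality=2.0, rng=None):
    """Pick an action by blending computed utility with observed tendencies.

    belief: the agent's (possibly wrong) view of the world.
    utility_fn: maps (action, belief) to a score; the normative "should" part.
    tendencies: dict of action -> weight; the descriptive "tend to" part
        (hypothetical numbers standing in for observed behavior).
    rationality: higher values favor the best-scoring action more strongly;
        0 ignores utility and follows the tendencies alone.
    """
    rng = rng or random.Random()
    actions = list(tendencies)
    scores = [utility_fn(a, belief) for a in actions]
    top = max(scores)  # subtract the max so exp() cannot overflow
    weights = [
        tendencies[a] * math.exp(rationality * (s - top))
        for a, s in zip(actions, scores)
    ]
    return rng.choices(actions, weights=weights, k=1)[0]

def utility(action, belief):
    # Toy scoring: attacking looks better the weaker the enemy is believed to be.
    health = belief.get("enemy_health", 50.0) / 100.0
    return {"attack": 1.0 - health, "flee": health, "taunt": 0.2}[action]

belief = {"enemy_health": 30.0}  # what the agent believes, not ground truth
tendencies = {"attack": 0.5, "flee": 0.3, "taunt": 0.2}
picks = [choose_action(belief, utility, tendencies, rng=random.Random(i))
         for i in range(10)]
print(picks)
```

Because the choice is sampled rather than maximized, repeated calls give varied results that still lean toward sensible actions, which is the effect the figure is after.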